MATH 55203–55303

Theory of Functions of a Complex Variable I–II

Fall 2025–Spring 2026

(Spring) SCEN 322, MWF 2:00–2:50 p.m.

Next class day: Friday, February 20, 2026

[Definition: The complex numbers] The set of complex numbers is \(\R ^2\), denoted \(\C \). (In this class, you may use everything you know about \(\R \) and \(\R ^2\)—in particular, that \(\R ^2\) is an abelian group and a normed vector space.)

[Definition: Real and imaginary parts] If \((x,y)\) is a complex number, then \(\re (x,y)=x\) and \(\im (x,y)=y\).

[Definition: Addition and multiplication] If \((x,y)\) and \((\xi ,\eta )\) are two complex numbers, we define

Let \((x,y)\) and \((\xi ,\eta )\) be two complex numbers. Then\begin{align*} (x,y)\cdot (\xi ,\eta )&=(x\xi -y\eta ,x\eta +y\xi ),\\ (\xi ,\eta )\cdot (x,y)&=(\xi x-\eta y,\xi y+\eta x). \end{align*}Because multiplication in the real numbers is commutative, we have that

\begin{align*} (\xi ,\eta )\cdot (x,y)&=(x\xi -y\eta ,y\xi + x\eta ). \end{align*}Because addition in the real numbers is commutative, we have that

\begin{align*} (\xi ,\eta )\cdot (x,y)&=(x\xi -y\eta , x\eta +y\xi )=(x,y)\cdot (\xi ,\eta ) \end{align*}as desired.
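The pair definition of multiplication can be spot-checked numerically. The following is a minimal sketch, not part of the course materials: the helper name `mul` and the sample points are hypothetical, and complex numbers are represented as Python tuples `(x, y)`.

```python
def mul(a, b):
    """Multiply two complex numbers given as pairs (x, y) of reals.

    Hypothetical helper: implements (x, y)(xi, eta) = (x*xi - y*eta, x*eta + y*xi).
    """
    x, y = a
    xi, eta = b
    return (x * xi - y * eta, x * eta + y * xi)


# Sample points chosen only for illustration.
a = (1.0, 2.0)
b = (3.0, -4.0)

# Both orders give the same pair, as the commutativity argument predicts.
print(mul(a, b), mul(b, a))

# The pair product agrees with Python's built-in complex multiplication.
print(complex(*mul(a, b)), complex(*a) * complex(*b))
```

A numerical check at one pair of points is of course not a proof; it only illustrates the identity established above.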

[Definition: Notation for the complex numbers]

If \(r\in \R \), we identify \(r\) with the number \((r,0)\in \C \).

We let \(i\) denote \((0,1)\).

[Definition: Conjugate] The conjugate of the complex number \(x+iy\), where \(x\), \(y\) are real, is \(\overline {x+iy}=x-iy\).

\(z\bar z\) is a positive real number, and we know from real analysis that positive real numbers have reciprocals. Thus \(\frac {1}{z\bar z}\in \R \). We can multiply complex numbers by real numbers, so \(\frac {1}{z\bar z}\bar z\) is a complex number and it is the \(w\) of the problem statement.

[Definition: Modulus] If \(z\) is a complex number, we define its modulus \(|z|\) as \(|z|=\sqrt {z\bar z}\).

The conclusion is that \(|z+w|\leq |z|+|w|\) for all \(z\), \(w\in \C \). This is Proposition 1.2.3 in your textbook.

If \(\{z_n\}_{n=1}^\infty \) is a sequence of points in \(\C \) and \(z\in \C \), then \(z_n\to z\) if and only if both \(\re z_n\to \re z\) and \(\im z_n\to \im z\).

[Definition: Maclaurin series] If \(f:\R \to \R \) is an infinitely differentiable function, then the Maclaurin series for \(f\) is the power series

This is Proposition 1.2.4 in your book. If \(n\in \N \), and if \(z_1\), \(z_2,\dots ,z_n\) and \(w_1\), \(w_2,\dots ,w_n\) are complex numbers, then\begin{equation*}\Bigl |\sum _{k=1}^n z_k\,w_k\Bigr |^2\leq \sum _{k=1}^n |z_k|^2 \sum _{k=1}^n |w_k|^2.\end{equation*}We can prove this as follows. By the triangle inequality, \(|z_1w_1+z_2w_2|\leq |z_1w_1|+|z_2w_2|=|z_1||w_1|+|z_2||w_2|\). A straightforward induction argument yields that

\begin{equation*}\Bigl |\sum _{k=1}^n z_k\,w_k\Bigr | \leq \sum _{k=1}^n|z_k||w_k|.\end{equation*}Applying the real Cauchy-Schwarz inequality with \(x_k=|z_k|\) and \(\xi _k=|w_k|\) completes the proof.
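As an illustration of the inequality, here is a small numerical sketch. The function name `cauchy_schwarz_sides` and the sample vectors are assumptions made for this example only.

```python
def cauchy_schwarz_sides(zs, ws):
    """Return (lhs, rhs) of |sum z_k w_k|^2 <= (sum |z_k|^2)(sum |w_k|^2).

    Hypothetical helper; zs and ws are equal-length lists of Python complex numbers.
    """
    lhs = abs(sum(z * w for z, w in zip(zs, ws))) ** 2
    rhs = sum(abs(z) ** 2 for z in zs) * sum(abs(w) ** 2 for w in ws)
    return lhs, rhs


# Sample data chosen only for illustration.
zs = [1 + 2j, -3j, 0.5 - 1j]
ws = [2 - 1j, 1 + 1j, -4 + 0j]
lhs, rhs = cauchy_schwarz_sides(zs, ws)
print(lhs, rhs, lhs <= rhs)
```

Taking `ws` to be the conjugates of `zs` makes the two sides agree up to rounding, which is the equality case.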

It converges to \(e^x\).

An induction argument establishes that\begin{align*}\re i^k &= \begin {cases} 0, &k\text { is odd},\\1,&k\text { is even and a multiple of~$4$},\\-1,&k\text { is even and not a multiple of~$4$},\end {cases} \end{align*}and

\begin{align*} \im i^k &= \begin {cases} 0, &k\text { is even},\\1,&k\text { is odd and one more than a multiple of~$4$},\\-1,&k\text { is odd and one less than a multiple of~$4$}.\end {cases} \end{align*}We then see that we may write the Maclaurin series for \(\cos \) and \(\sin \) as

\begin{equation*}\cos (y)=\sum _{k=0}^\infty \re i^k \frac {y^k}{k!}, \qquad \sin (y)=\sum _{k=0}^\infty \im i^k \frac {y^k}{k!} .\end{equation*}We then have that\begin{equation*}\re \Bigl (\sum _{k=0}^n \frac {(iy)^k}{k!}\Bigr ) =\sum _{k=0}^n \re \biggl (\frac {(iy)^k}{k!}\biggr ) =\sum _{k=0}^n \re i^k\frac {y^k}{k!}\end{equation*}which converges to \(\cos y\) as \(n\to \infty \). Similarly\begin{equation*}\im \Bigl (\sum _{k=0}^n \frac {(iy)^k}{k!}\Bigr ) =\sum _{k=0}^n \im \biggl (\frac {(iy)^k}{k!}\biggr ) =\sum _{k=0}^n \im i^k\frac {y^k}{k!}\end{equation*}converges to \(\sin y\) as \(n\to \infty \). Thus the series \(\sum _{k=0}^\infty \frac {(iy)^k}{k!}\) converges to \(\cos y+i\sin y\), as desired.
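The convergence of the partial sums can be observed numerically. This is a minimal sketch under the assumption that double-precision arithmetic is accurate enough for the chosen \(y\); the function name `exp_i_partial_sum` is hypothetical.

```python
import math


def exp_i_partial_sum(y, n):
    """Return sum_{k=0}^{n} (iy)^k / k! as a Python complex number."""
    total = 0j
    term = 1 + 0j  # the k = 0 term, (iy)^0 / 0!
    for k in range(n + 1):
        total += term
        term *= 1j * y / (k + 1)  # turn (iy)^k / k! into (iy)^(k+1) / (k+1)!
    return total


y = 1.3  # sample value chosen only for illustration
for n in (2, 5, 10, 30):
    # The partial sums approach cos(y) + i sin(y) as n grows.
    print(n, exp_i_partial_sum(y, n))
print(complex(math.cos(y), math.sin(y)))
```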

[Definition: The complex exponential] If \(x\) is real, we define

Using the sum angle identities for sine and cosine, we compute\begin{align*} \exp (iy+i\eta ) &=\exp (i(y+\eta )) = \cos (y+\eta )+i\sin (y+\eta ) \\&= \cos y\cos \eta - \sin y\sin \eta + i\sin y\cos \eta +i \cos y\sin \eta \end{align*}and

\begin{align*} \exp (iy)\exp (i\eta )&= (\cos y+i\sin y)(\cos \eta +i\sin \eta ) \\&= \cos y\cos \eta - \sin y\sin \eta + i\sin y\cos \eta +i \cos y\sin \eta \end{align*}and observe that they are equal.

There are real numbers \(x\), \(y\), \(\xi \), \(\eta \) such that \(z=x+iy\) and \(w=\xi +i\eta \). By definition,

\begin{equation*}\exp (z)=\exp (x)\exp (iy),\qquad \exp (w)=\exp (\xi )\exp (i\eta ).\end{equation*}Because multiplication in the complex numbers is associative and commutative,\begin{align*}\exp (z)\exp (w) &=[\exp (x)\exp (iy)][\exp (\xi )\exp (i\eta )] =[\exp (x)\exp (\xi )][\exp (iy)\exp (i\eta )] .\end{align*}By properties of exponentials in the real numbers and by the previous problem, we see that

\begin{align*} \exp (z)\exp (w) &=\exp (x+\xi )\exp (iy+i\eta ) .\end{align*}By definition of the complex exponential,

\begin{align*}\exp (z)\exp (w)&=\exp ((x+\xi )+i(y+\eta ))=\exp (z+w)\end{align*}as desired.

We know from real analysis that, if \((x,y)\) lies on the unit circle, then \((x,y)=(\cos \theta ,\sin \theta )\) for some real number \(\theta \). By definition of complex modulus, if \(|z|=1\) and \(z=x+iy\) then \((x,y)\) lies on the unit circle. Thus \(z=\cos \theta +i\sin \theta =\exp (i\theta )\) for some \(\theta \in \R \). Infinitely many such numbers \(\theta \) exist.

[Chapter 1, Problem 25] If \(\theta \), \(\varpi \in \R \), then \(e^{i\theta }=e^{i\varpi }\) if and only if \((\theta -\varpi )/(2\pi )\) is an integer.

Observe that \(|re^{i\theta }|=r|e^{i\theta }|\) because \(r\geq 0\) and because the modulus is multiplicative. But \(|e^{i\theta }|=|\cos \theta +i\sin \theta |=\sqrt {\cos ^2\theta +\sin ^2\theta }=1\), and so the only choice for \(r\) is \(r=|z|\). If \(z=0\) then we must have that \(r=0\) and can take any real number for \(\theta \).

If \(z\neq 0\), let \(r=|z|\). Then \(w=\frac {1}{r}z\) is a complex number with \(|w|=1\), and so there exist infinitely many values \(\theta \) with \(e^{i\theta }=w\) and thus \(z=re^{i\theta }\).

Suppose that \(z=re^{i\theta }\) for some \(r\geq 0\), \(\theta \in \R \). Then \(z^6=r^6 e^{6i\theta }\). If \(z^6=i\), then \(1=|i|=|z^6|=r^6\) and so \(r=1\) because \(r\geq 0\). We must then have that \(i=e^{6i\theta }\). Observe that \(i=e^{i\pi /2}\). By Homework 1.25, we must have that \(6\theta =\pi /2+2\pi n\) for some \(n\in \Z \), and so \((e^{i\theta })^6=i\) if and only if \(\theta =\pi /12+n \pi /3\) for some \(n\in \Z \). Thus the solutions are

\begin{equation*}e^{i\pi /12},\quad e^{5i\pi /12},\quad e^{9i\pi /12},\quad e^{13i\pi /12},\quad e^{17i\pi /12},\quad e^{21i\pi /12}.\end{equation*}Any other admissible \(\theta \) differs from one of the six angles listed by an integer multiple of \(2\pi \), and so yields one of the same six solutions.
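The six solutions can be checked numerically. The sketch below assumes the angles \(\theta =\pi /12+n\pi /3\), \(n=0,\dots ,5\), derived in the solution; the function name `sixth_roots_of_i` is hypothetical.

```python
import cmath
import math


def sixth_roots_of_i():
    """Return e^{i*theta} for theta = pi/12 + n*pi/3, n = 0, ..., 5."""
    return [cmath.exp(1j * (math.pi / 12 + n * math.pi / 3)) for n in range(6)]


for z in sixth_roots_of_i():
    # Each z**6 should agree with i up to rounding error.
    print(z, z ** 6)
```

Taking \(n=6\) gives the angle \(\pi /12+2\pi \), which reproduces the first root.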

[Definition: Real polynomial] Let \(p:\R \to \R \) be a function. We say that \(p\) is a (real) polynomial in one (real) variable if there is an \(n\in \N _0\) and constants \(a_0\), \(a_1,\dots ,a_n\in \R \) such that \(p(x)=\sum _{k=0}^n a_k x^k\) for all \(x\in \R \).

[Definition: Real polynomial in two variables] Let \(p:\R ^2\to \R \) be a function. We say that \(p\) is a (real) polynomial in two (real) variables if there is an \(n\in \N _0\) and constants \(a_{k,\ell }\in \R \) such that \(p(x,y)=\sum _{k=0}^n \sum _{\ell =0}^n a_{k,\ell } x^k y^\ell \) for all \(x\), \(y\in \R \).

\(p\) and \(q\) are infinitely differentiable functions from \(\R \) to \(\R \), and because \(p(x)=q(x)\) for all \(x\in \R \), we must have that \(p'=q'\), \(p''=q'',\dots ,p^{(k)}=q^{(k)}\) for all \(k\in \N \). We compute \(p^{(k)}(0)=k!a_k\) and \(q^{(k)}(0)=k!b_k\). Setting them equal we see that \(a_k=b_k\).

[Definition: Degree] If \(p(z)=\sum _{k=0}^n a_k\,z^k\), then the degree of \(p\) is the largest nonnegative integer \(m\) such that \(a_m\neq 0\). (The degree of the zero polynomial \(p(z)=0\) is either undefined, \(-1\), or \(-\infty \).)

If \(p\) is the zero polynomial we may take \(q\) to also be the zero polynomial. If \(p\) is a nonzero constant polynomial then no such \(x_0\) can exist. We therefore need only consider the case where \(p\) is a polynomial of degree \(m\geq 1\). If \(m=1\), then \(p(x)=a_1x+a_0\) for some \(a_1\), \(a_0\); if \(p(x_0)=0\) then \(a_0=-a_1x_0\) and so \(p(x)=a_1(x-x_0)\). Then \(q(x)=a_1\) is a polynomial of degree \(0=m-1\).

Suppose that the statement is true for all polynomials of degree at most \(m-1\), \(m\geq 2\). Let \(p\) be a polynomial of degree \(m\). Then \(p(x)=a_m x^m +r(x)\) where \(r\) is a polynomial of degree at most \(m-1\). We add and subtract \(a_m x_0 x^{m-1}\) to see that

\begin{equation*}p(x)=a_m x^{m-1} (x-x_0) + a_mx_0 x^{m-1}+r(x).\end{equation*}Then \(s(x)=a_mx_0 x^{m-1}+r(x)\) is a polynomial of degree at most \(m-1\). If \(s\) is a constant then \(0=p(x_0)=a_m x_0^{m-1}(x_0-x_0)+s\) and so \(s=0\); taking \(q(x)=a_mx^{m-1}\) we are done. Otherwise, \(s(x)\) is a polynomial of degree at least one and at most \(m-1\). Also, \(s(x_0)=p(x_0)-a_mx_0^{m-1}(x_0-x_0)=0\), so by the induction hypothesis \(s(x)=(x-x_0)t(x)\) for a polynomial \(t\) of degree at most \(m-2\). Taking \(q(x)=a_mx^{m-1}+t(x)\) we are done.
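The induction above peels off one leading term at a time, which is closely related to synthetic division (Horner's scheme). The sketch below uses that scheme rather than the induction itself; the function name `divide_by_root` and the coefficient-list convention `[a_0, ..., a_m]` are assumptions made for this example.

```python
def divide_by_root(coeffs, x0):
    """Divide p(x) = sum coeffs[k] * x**k by (x - x0) using synthetic division.

    Returns (q, remainder) with p(x) = (x - x0) * q(x) + remainder, where q is
    a coefficient list [q_0, ..., q_{m-1}] and remainder equals p(x0).
    """
    values = []
    carry = 0
    for a in reversed(coeffs):  # process a_m first, a_0 last
        carry = a + x0 * carry
        values.append(carry)
    remainder = values.pop()    # the final Horner value is p(x0)
    values.reverse()            # reorder as [q_0, ..., q_{m-1}]
    return values, remainder


# p(x) = x^2 - 3x + 2 has the root x0 = 1, so the remainder is 0 and q(x) = x - 2.
print(divide_by_root([2, -3, 1], 1))
```

When \(p(x_0)=0\) the remainder vanishes and the returned quotient plays the role of \(q\) in the statement.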

Let \(r(x)=p(x)-q(x)\). Then \(r\) is a polynomial of degree at most \(n\), and \(r(x_j)=p(x_j)-q(x_j)=0\) for all \(0\leq j\leq n\).

Suppose for the sake of contradiction that \(r\) is not identically equal to zero. Then \(r\) is a polynomial of degree \(m\), \(0\leq m\leq n\). By Problem 410,

\begin{equation*}r(x)=(x-x_1)(x-x_2)\dots (x-x_m)r_m(x)\end{equation*}where \(r_m\) is a polynomial of degree \(m-m\), that is, a constant. But\begin{equation*}0=p(x_0)-q(x_0)=r(x_0)=(x_0-x_1)(x_0-x_2)\dots (x_0-x_m) r_m(x_0).\end{equation*}Since \(x_j\neq x_0\) for all \(j\geq 1\) we must have that \(r_m(x_0)=0\); thus \(r_m\) is the constant function zero and so \(r\) is the constant function zero, as was to be proven. (This is technically a contradiction to the assumption \(m\geq 0\) because if \(m\geq 0\) then \(r\) is not the zero polynomial.)

Fix a \(y\in \R \). Then \(p_y(x)=\sum _{j=0}^n \Bigl (\sum _{k=0}^n a_{j,k}y^k\Bigr ) x^j\) and \(q_y(x)=\sum _{j=0}^n \Bigl (\sum _{k=0}^n b_{j,k}y^k\Bigr ) x^j\) are both polynomials in one variable that are equal for all \(x\). So by Problem 400 their coefficients must be equal, so \(\Bigl (\sum _{k=0}^n a_{j,k}y^k\Bigr )=\Bigl (\sum _{k=0}^n b_{j,k}y^k\Bigr )\). This is true for all \(y\in \R \); another application of Problem 400 shows that \(a_{j,k}=b_{j,k}\) for all \(j\) and \(k\).

The polynomial \(p(x,y)=xy\) is such a polynomial, because \(p(0,y)=0\) for all \(y\in \R \) and \(p(x,0)=0\) for all \(x\in \R \), and there are infinitely many \(x\in \R \) and infinitely many \(y\in \R \).

Definition 1.3.1 (part 1). Let \(\Omega \subseteq \R ^2\) be open. Suppose that \(f:\Omega \to \R \). We say that \(f\) is continuously differentiable, or \(f\in C^1(\Omega )\), if the two partial derivatives \(\frac {\partial f}{\partial x}\) and \(\frac {\partial f}{\partial y}\) exist everywhere in \(\Omega \) and \(f\), \(\frac {\partial f}{\partial x}\), and \(\frac {\partial f}{\partial y}\) are all continuous on \(\Omega \).

Let \(z=(x,y)\). Let \((\xi ,\eta )\in B((x,y),r)\). We consider the case \(\xi \geq x\) and \(\eta \geq y\); the cases \(\xi <x\) or \(\eta <y\) are similar. Then \(\{(t,y):x\leq t\leq \xi \}\subset B((x,y),r)\), and if we let \(f_y(t)=f(t,y)\), then \(f_y\) is a continuously differentiable function on \([x,\xi ]\) with \(f_y'(t)=0\) for all \(x\leq t\leq \xi \); by the Mean Value Theorem, \(f_y(x)=f_y(\xi )\) and so \(f(x,y)=f(\xi ,y)\). Similarly, \(\{(\xi ,t):y\leq t\leq \eta \}\subset B((x,y),r)\), and so \(f(x,y)=f(\xi ,y)=f(\xi ,\eta )\).

Thus \(f\) is a constant in \(B((x,y),r)\).

[Definition: Complex polynomials in one variable] Let \(p:\C \to \C \) be a function. We say that \(p\) is a polynomial in one (complex) variable if there is an \(n\in \N _0\) and constants \(a_0\), \(a_1,\dots ,a_n\in \C \) such that \(p(z)=\sum _{k=0}^n a_k z^k\) for all \(z\in \C \).

[Definition: Complex polynomial in two variables] Let \(p:\C \to \C \) be a function. We say that \(p\) is a polynomial in two real variables if there is an \(n\in \N _0\) and constants \(a_{k,\ell }\in \C \) such that \(p(x+iy)=\sum _{k=0}^n \sum _{\ell =0}^n a_{k,\ell } x^k y^\ell \) for all \(x\), \(y\in \R \).

[Chapter 1, Problem 35] The functions \(p(x+iy)=x\) and \(q(x+iy)=x^2\) are both clearly polynomials of two real variables. Prove that neither is a polynomial of one complex variable.

Definition 1.3.1 (part 2). Let \(\Omega \subseteq \C \) be an open set. Recall \(\C =\R ^2\). Let \(f:\Omega \to \C \) be a function. Then \(f\in C^1(\Omega )\) if \(\re f\), \(\im f\in C^1(\Omega )\).

[Definition: Derivative of a complex function] Let \(f\in C^1(\Omega )\). Let \(u(z)=\re f(z)\) and let \(v(z)=\im f(z)\). Then

Let \(f=u+iv\), \(g=w+i\varpi \), where \(u\), \(v\), \(w\), and \(\varpi \) are real-valued functions in \(C^1(\Omega )\). Then \(fg=(uw-v\varpi )+i(vw+u\varpi )\), where \((uw-v\varpi )\) and \((vw+u\varpi )\) are both real-valued \(C^1\) functions.

Then

\begin{align*} \frac {\partial }{\partial x} (fg) &=\frac {\partial }{\partial x} [(uw-v\varpi )+i(vw+u\varpi )] \\&= \frac {\partial }{\partial x}(uw-v\varpi )+i\frac {\partial }{\partial x}(vw+u\varpi ). \end{align*}Applying the Leibniz (product) rule for real-valued functions, we see that

\begin{align*} \frac {\partial }{\partial x} (fg) &=\frac {\partial u}{\partial x}w + u\frac {\partial w}{\partial x}-\frac {\partial v}{\partial x}\varpi -v\frac {\partial \varpi }{\partial x} \\&\qquad + i\frac {\partial v}{\partial x}w + iv\frac {\partial w}{\partial x}+i\frac {\partial u}{\partial x}\varpi +iu\frac {\partial \varpi }{\partial x}. \end{align*}Furthermore,

\begin{align*} f\frac {\partial g}{\partial x}+\frac {\partial f}{\partial x} g &= (u+iv) \biggl (\frac {\partial w}{\partial x}+i\frac {\partial \varpi }{\partial x}\biggr ) \\&\qquad +\biggl (\frac {\partial u}{\partial x}+i\frac {\partial v}{\partial x}\biggr )(w+i\varpi ) \\&= u\frac {\partial w}{\partial x}+iu\frac {\partial \varpi }{\partial x}+iv\frac {\partial w}{\partial x}-v\frac {\partial \varpi }{\partial x}\\&\qquad +\frac {\partial u}{\partial x}w+i\frac {\partial u}{\partial x}\varpi +i\frac {\partial v}{\partial x}w -\frac {\partial v}{\partial x}\varpi . \end{align*}Rearranging, we see that the two terms are the same.

[Definition: Complex derivative] Let \(f\in C^1(\Omega )\). Then

Recall that \(z=x+iy\). Thus,\begin{equation*}\frac {\partial }{\partial z} (z) = \frac {1}{2}\frac {\partial }{\partial x}(x+iy)+\frac {1}{2i}\frac {\partial }{\partial y}(x+iy) =\frac {1}{2}+\frac {i}{2i}=1 \end{equation*}and\begin{equation*}\frac {\partial }{\partial \bar z} (z) = \frac {1}{2}\frac {\partial }{\partial x}(x+iy)-\frac {1}{2i}\frac {\partial }{\partial y}(x+iy) =\frac {1}{2}-\frac {i}{2i}=0 .\end{equation*}Recall that \(\bar z=x-iy\). Thus,

\begin{equation*}\frac {\partial }{\partial z} (\bar z) = \frac {1}{2}\frac {\partial }{\partial x}(x-iy)+\frac {1}{2i}\frac {\partial }{\partial y}(x-iy) =\frac {1}{2}-\frac {i}{2i}=0 \end{equation*}and\begin{equation*}\frac {\partial }{\partial \bar z} (\bar z) = \frac {1}{2}\frac {\partial }{\partial x}(x-iy)-\frac {1}{2i}\frac {\partial }{\partial y}(x-iy) =\frac {1}{2}+\frac {i}{2i}=1 .\end{equation*}
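The four values just computed can be approximated by central finite differences. This is only a sketch: the helper name `wirtinger` and the step size `h` are assumptions, and the formulas used are \(\frac {\partial }{\partial z}=\frac 12\frac {\partial }{\partial x}+\frac {1}{2i}\frac {\partial }{\partial y}\) and \(\frac {\partial }{\partial \bar z}=\frac 12\frac {\partial }{\partial x}-\frac {1}{2i}\frac {\partial }{\partial y}\), as in the computation above.

```python
def wirtinger(f, z, h=1e-6):
    """Approximate (df/dz, df/dzbar) at z using central differences.

    Hypothetical helper; f maps a Python complex to a Python complex.
    """
    fx = (f(z + h) - f(z - h)) / (2 * h)            # partial derivative in x
    fy = (f(z + 1j * h) - f(z - 1j * h)) / (2 * h)  # partial derivative in y
    d_z = 0.5 * fx + fy / 2j      # (1/2) d/dx + (1/(2i)) d/dy
    d_zbar = 0.5 * fx - fy / 2j   # (1/2) d/dx - (1/(2i)) d/dy
    return d_z, d_zbar


z0 = 0.7 - 0.2j  # sample point chosen only for illustration
print(wirtinger(lambda z: z, z0))              # approximately (1, 0)
print(wirtinger(lambda z: z.conjugate(), z0))  # approximately (0, 1)
```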

This follows immediately from linearity of the differential operators \(\frac {\partial }{\partial x}\) and \(\frac {\partial }{\partial y}\).

We have that\begin{align*} \frac {\partial }{\partial z} (fg) =\frac {1}{2}\frac {\partial }{\partial x} (fg) +\frac {1}{2i}\frac {\partial }{\partial y} (fg) .\end{align*}Using the Leibniz rules for \(\frac {\partial }{\partial x}\) and \(\frac {\partial }{\partial y}\), we see that

\begin{align*} \frac {\partial }{\partial z} (fg) &=\frac {1}{2}f\frac {\partial g}{\partial x} +\frac {1}{2}\frac {\partial f}{\partial x} g +\frac {1}{2i}f\frac {\partial g}{\partial y}+\frac {1}{2i}\frac {\partial f}{\partial y}g \\&= f\biggl (\frac 12\frac {\partial g}{\partial x}+\frac {1}{2i}\frac {\partial g}{\partial y}\biggr ) +\biggl (\frac {1}{2}\frac {\partial f}{\partial x}+\frac {1}{2i}\frac {\partial f}{\partial y}\biggr )g \\&=f\frac {\partial g}{\partial z}+\frac {\partial f}{\partial z}g .\end{align*}The argument for \(\frac {\partial }{\partial \bar z}\) is similar.

If \(\ell =m=0\), then \(z^\ell \bar z^m=1\) and \(\ell z^{\ell -1}\bar z^m=0=mz^\ell \bar z^{m-1}\). The result is obvious in this case. Suppose now that \(m=0\), \(\ell \geq 1\) and the result is true for \(\ell -1\). (The result is true for \(\ell -1\) if \(\ell =1\) by the above argument.) By Fact 570,

\begin{align*} \p { z} z^\ell &=\p { z}(z\cdot z^{\ell -1}) = \biggl (\p { z}z\biggr )z^{\ell -1}+z\biggl (\p { z}z^{\ell -1}\biggr ), \\ \p {\bar z} z^\ell &=\p {\bar z}(z\cdot z^{\ell -1}) = \biggl (\p {\bar z}z\biggr )z^{\ell -1}+z\biggl (\p {\bar z}z^{\ell -1}\biggr ), \end{align*}which by the induction hypothesis and Problem 540 equal

\begin{align*} \p { z} z^\ell &= z^{\ell -1}+z\cdot (\ell -1)z^{\ell -2}, \\ \p {\bar z} z^\ell &= 0\cdot z^{\ell -1}+z\cdot 0, \end{align*}which simplify to the desired result. Thus by induction the result is true whenever \(m=0\). A similar induction argument yields the result whenever \(\ell =0\). Finally, the general case follows from Fact 570 as follows:

\begin{align*} \frac {\partial }{\partial z} (z^\ell \bar z^m)&= \biggl (\p {z}z^\ell \biggr )\bar z^m+z^\ell \biggl (\p { z}\bar z^m\biggr ) = \ell z^{\ell -1}\bar z^m+0,\\ \frac {\partial }{\partial \bar z} (z^\ell \bar z^m)&= \biggl (\p {\bar z}z^\ell \biggr )\bar z^m+z^\ell \biggl (\p {\bar z}\bar z^m\biggr ) = 0+ z^{\ell }\cdot m\bar z^{m-1} \end{align*}as desired.

If \(p\) is a polynomial in one complex variable, then by definition there are constants \(a_k\in \C \) and \(n\in \N \) such that \(p(z)=\sum _{k=0}^n a_k z^k\). By linearity of the complex derivative operator and by Problem 580, we have that \(\p {\bar z} p(z)=0\), as desired. Now suppose that \(p\) is a complex polynomial in two real variables and \(\p {\bar z} p(z)=0\) for all \(z\in \C \). By Problem 510, there are constants \(b_{k,\ell }\in \C \) and \(m\in \N \) such that

\begin{equation*}p(z)=\sum _{k=0}^m \sum _{\ell =0}^m b_{k,\ell } z^k\bar z^\ell \end{equation*}for all \(z\in \C \). By linearity of the complex derivative operator and by Problem 580, we have that\begin{equation*}\p {\bar z} p(z)=\sum _{k=0}^m \sum _{\ell =0}^m \ell b_{k,\ell } z^k\bar z^{\ell -1}.\end{equation*}This polynomial is identically equal to zero. By Problem 511, this implies that \(\ell b_{k,\ell }=0\) for all \(k\) and \(\ell \). In particular, if \(\ell \geq 1\) then \(b_{k,\ell }=0\). Thus\begin{equation*}p(z)=\sum _{k=0}^m b_{k,0} z^k\end{equation*}as desired.

Definition 1.4.1. Let \(\Omega \subseteq \C \) be open and let \(f\in C^1(\Omega )\). We say that \(f\) is holomorphic in \(\Omega \) if

We observe that\begin{equation*}\p [f]{x}=\p [f]{z}+\p [f]{{\bar z}},\qquad \p [f]{y}=i\p [f]{z}-i\p [f]{{\bar z}}.\end{equation*}Thus \(\p [f]{x}=\p [f]{y}=0\) in \(\Omega \) and the result follows from Problem 480.

[Chapter 1, Problem 34] Suppose that \(\Omega \subseteq \C \) is open and that \(f\in C^1(\Omega )\). Show that

Observe that \(\frac {1}{z}=\frac {\bar z}{z\bar z}\) and so if \(z=x+iy\), \(x\), \(y\in \R \), then \(\frac {1}{z}=\frac {x-iy}{x^2+y^2}\). We compute\begin{align*}\p {x}\frac {1}{z}&= \p {x}\re \frac {1}{z} +i\p {x}\im \frac {1}{z}= \p {x}\frac {x}{x^2+y^2} +i\p {x}\frac {-y}{x^2+y^2} = \frac {y^2-x^2+2ixy}{(x^2+y^2)^2}, \\ \p {y}\frac {1}{z}&= \p {y}\frac {x}{x^2+y^2} +i\p {y}\frac {-y}{x^2+y^2} = \frac {-ix^2+iy^2-2xy}{(x^2+y^2)^2}. \end{align*}Thus

\begin{align*}\p {z}\frac {1}{z} &=\frac {1}{2} \p {x}\frac {1}{z}-\frac {i}{2}\p {y}\frac {1}{z} = \frac {y^2-x^2+2ixy}{(x^2+y^2)^2} \\&= \frac {-(x-iy)^2}{(x+iy)^2(x-iy)^2} =-\frac {1}{z^2} \end{align*}and

\begin{equation*}\p {\bar z}\frac {1}{z} =\frac {1}{2} \p {x}\frac {1}{z}+\frac {i}{2}\p {y}\frac {1}{z} = 0. \end{equation*}Using the Leibniz rule for the inductive step, a straightforward induction argument shows that\begin{equation*}\p {z}\frac {1}{z^n}=-\frac {n}{z^{n+1}}, \qquad \p {\bar z}\frac {1}{z^n} =0.\end{equation*}

[Chapter 1, Problem 49] Let \(\Omega \), \(W\subseteq \C \) be open and let \(g:\Omega \to W\), \(f:W\to \C \) be two \(C^1\) functions. The following chain rules are valid:

In particular, if \(f\) and \(g\) are both holomorphic then so is \(f\circ g\).

Lemma 1.4.2. Let \(f\in C^1(\Omega )\), let \(u=\re f\), and let \(v=\im f\). Then \(f\) is holomorphic in \(\Omega \) if and only if

Recall that\begin{align*} 2\p [f]{\bar z} = \p [f]{x} +i\p [f]{y} \end{align*}by definition of \(\p {\bar z}\). Applying the fact that \(f=u+iv\), we see that

\begin{align*} 2\p [f]{\bar z}&=\p [u]{x}+i\p [v]{x} +i \biggl (\p [u]{y}+i\p [v]{y}\biggr ) \\&= \biggl (\p [u]{x}-\p [v]y\biggr )+ i\biggl (\p [v]x+\p [u]y\biggr ). \end{align*}Because \(u\) and \(v\) are real-valued, so are their derivatives. Thus, the real and imaginary parts of the right hand side, respectively, are \(\p [u]x-\p [v]y\) and \(\p [u]y+\p [v]x\).

Thus, \(\p [f]{\bar z} = 0\) if and only if the Cauchy-Riemann equations hold.

Recall that \(\exp \) is given by \(\exp (x+iy)=e^x(\cos y+i\sin y)=e^x\cos y+ie^x\sin y\). Writing \(u(x,y)=e^x\cos y\), \(v(x,y)=e^x\sin y\), we have that

\begin{equation*}\frac {\partial u}{\partial x} = e^x\cos y=\frac {\partial v}{\partial y}, \quad \frac {\partial u}{\partial y} = -e^x\sin y=-\frac {\partial v}{\partial x},\end{equation*}and so \(u\) and \(v\) satisfy the Cauchy-Riemann equations; thus \(f(x,y)=u(x,y)+iv(x,y)=\exp (x+iy)\) is holomorphic.
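The Cauchy-Riemann equations for \(\exp \) can also be checked approximately by finite differences. In this sketch the helper name `cauchy_riemann_residuals` and the step size are assumptions; the conjugate function is included only as a contrasting example that fails the equations.

```python
import cmath


def cauchy_riemann_residuals(f, z, h=1e-6):
    """Return (u_x - v_y, u_y + v_x) at z, estimated by central differences.

    Both entries are near 0 when f = u + iv satisfies the Cauchy-Riemann
    equations at z. Hypothetical helper for illustration.
    """
    fx = (f(z + h) - f(z - h)) / (2 * h)            # df/dx = u_x + i v_x
    fy = (f(z + 1j * h) - f(z - 1j * h)) / (2 * h)  # df/dy = u_y + i v_y
    return fx.real - fy.imag, fy.real + fx.imag


z0 = 0.3 + 1.1j  # sample point chosen only for illustration
print(cauchy_riemann_residuals(cmath.exp, z0))                # both entries near 0
print(cauchy_riemann_residuals(lambda z: z.conjugate(), z0))  # first entry near 2
```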

Proposition 1.4.3. [Slight generalization.] Let \(f\in C^1(\Omega )\). Then \(f\) is holomorphic at \(p\in \Omega \) if and only if \(\frac {\partial f}{\partial x}(p)=\frac {1}{i}\frac {\partial f}{\partial y}(p)\) and that in this case

By definition of \(\p {z}\) and \(\p {\bar z}\), if \(f\in C^1(\Omega )\) then \(\p [f]{x}=\p [f]{z}+\p [f]{\bar z}\) and \(\p [f]{y}=i\p [f]{z}-i\p [f]{\bar z}\). Thus, if \(\p [f]{\bar z}(p)=0\) then \(\p [f]{x}(p)=\p [f]{z}(p)\) and \(\p [f]{y}(p)=i\p [f]{z}(p)=i\p [f]{x}(p)\), as desired.

Recall\begin{equation*}\p [f]{\bar z} = \frac 12\biggl (\p [f]x- \frac {1}{i}\p [f]{y}\biggr ).\end{equation*}Thus, if \(\p [f]x=\frac {1}{i}\p [f]y\) then \(\p [f]{\bar z}=0\), as desired.

Definition 1.4.4. We let \(\triangle =\frac {\partial ^2 }{\partial x^2}+\frac {\partial ^2 }{\partial y^2}\). If \(\Omega \subseteq \C \) is open and \(u\in C^2(\Omega )\), then \(u\) is harmonic if

We compute that\begin{align*}\p {z}\p [f]{\bar z} &= \frac {1}{4} \biggl (\p x+\frac {1}{i}\p y\biggr ) \biggl (\p [f] x-\frac {1}{i} \p [f] y\biggr ) \\&=\frac {1}{4}\biggl (\frac {\partial ^2f}{\partial x^2} +\frac {\partial ^2f}{\partial y^2} +\frac {1}{i}\frac {\partial ^2 f}{\partial y\,\partial x} -\frac {1}{i}\frac {\partial ^2 f}{\partial x\,\partial y}\biggr ) .\end{align*}If \(f\in C^2\) then \(\frac {\partial ^2 f}{\partial y\,\partial x} =\frac {\partial ^2 f}{\partial x\,\partial y}\) and the proof is complete. The argument for \(\p {\bar z}\p [f]{z} \) is similar.

Because \(f\) is holomorphic,\begin{equation*}\triangle f = 4\frac {\partial }{\partial z}\frac {\partial f} {\partial \bar z}=4\frac {\partial }{\partial z}0=0.\end{equation*}But\begin{equation*}\triangle f = (\triangle u) + i(\triangle v)\end{equation*}and \(\triangle u\) and \(\triangle v\) are both real-valued, so because \(\triangle f=0\) we must have \(\triangle u=0=\triangle v\) as well.
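As a concrete instance, the real and imaginary parts of \(z^3\) are \(x^3-3xy^2\) and \(3x^2y-y^3\), and both should be harmonic. The sketch below checks this with a five-point finite-difference Laplacian; the helper names and the step size are assumptions made for this example.

```python
def laplacian(u, x, y, h=1e-3):
    """Five-point finite-difference approximation of u_xx + u_yy at (x, y)."""
    return (u(x + h, y) + u(x - h, y) + u(x, y + h) + u(x, y - h)
            - 4 * u(x, y)) / h ** 2


def re_cube(x, y):
    """Real part of (x + iy)^3."""
    return x ** 3 - 3 * x * y ** 2


def im_cube(x, y):
    """Imaginary part of (x + iy)^3."""
    return 3 * x ** 2 * y - y ** 3


def not_harmonic(x, y):
    """|z|^2 = x^2 + y^2, whose Laplacian is 4; included for contrast."""
    return x ** 2 + y ** 2


x0, y0 = 0.4, -0.9  # sample point chosen only for illustration
print(laplacian(re_cube, x0, y0), laplacian(im_cube, x0, y0))  # both near 0
print(laplacian(not_harmonic, x0, y0))                         # near 4
```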

By Fact 600, \(f\) is a polynomial in one complex variable; that is, there is an \(n\in \N \) and constants \(a_k\in \C \) such that\begin{equation*}f(z)=\sum _{k=0}^n a_k\,z^k\end{equation*}for all \(z\in \C \). Let \(b\in \C \) and let\begin{equation*}F(z)=b+\sum _{k=0}^n \frac {a_k}{k+1}\,z^{k+1}.\end{equation*}By Problem 580, we have that \(\p {z}F=f\). There are infinitely many such polynomials, one for each choice of \(b\). By Problem 610, any two such antiderivatives \(F_1\) and \(F_2\) must differ by a constant.

Because \(u\) is harmonic, by Fact 680 we have that\begin{equation*}0=\triangle u=4\frac {\partial }{\partial \bar z} \frac {\partial u}{\partial z}\end{equation*}and so \(f=\p [u]{z}\) is holomorphic. By Problem 580 we have that \(f\) is a polynomial. Thus by Problem 700 there is a holomorphic polynomial \(F\) with \(\p [F]{z}=f=\p [u]{z}\). Let \(p=F\). Let \(g(z)=u(z)-p(z)\). Then \(g\) is a polynomial and \(\p [g]{z}=0\), so by Problem 1.34 \(\p [\overline g]{\bar z}=0\). Thus

\begin{equation*}\overline {g(z)}=\sum _{k=0}^m b_k \,z^k\end{equation*}for some \(m\in \N \) and some constants \(b_k\in \C \). Taking the complex conjugate yields that\begin{equation*}g(z)=\sum _{k=0}^m \overline {b_k}\,\bar z^k.\end{equation*}This completes the proof with \(q(z)=\sum _{k=0}^m \overline {b_k}\, z^k\).

Lemma 1.4.5. Let \(u\) be harmonic and real valued in \(\C \). Suppose in addition that \(u\) is a polynomial of two real variables. Then there is a holomorphic polynomial \(f\) such that \(u(z)=\re f(z)\).

By Problem 710, we have that \(u(z)=p(z)+q(\bar z)\) for some polynomials \(p\) and \(q\). We may write\begin{equation*}u(z)=\sum _{k=0}^n a_k z^k+\sum _{k=0}^n b_k \bar z^k =(a_0+b_0)+\sum _{k=1}^n a_k z^k+\sum _{k=1}^n b_k \bar z^k\end{equation*}for some \(n\in \N \) and some \(a_k\), \(b_k\in \C \). Because \(u\) is real-valued, we have that \(u(z)=\overline {u(z)}\) and so\begin{equation*} (a_0+b_0)+\sum _{k=1}^n a_k z^k+\sum _{k=1}^n b_k \bar z^k = \overline {(a_0+b_0)}+\sum _{k=1}^n \overline {b_k} z^k+\sum _{k=1}^n \overline {a_k} \bar z^k. \end{equation*}By Problem 511, this implies that \(a_0+b_0\) is real and that \(a_k=\overline {b_k}\) for all \(k\geq 1\). Thus

\begin{align*}u(z) &=(a_0+b_0)+ \sum _{k=1}^n (a_k z^k+\overline {a_k z^k}) =\re (a_0+b_0)+ \sum _{k=1}^n 2\re (a_k z^k) \\&=\re \Bigl ((a_0+b_0)+ \sum _{k=1}^n 2a_k z^k\Bigr )\end{align*}as desired.

Let \(\gamma :[0,1]\to \R ^2\) be a piecewise \(C^1\) simple closed curve. Let \(\Omega \subset \R ^2\) be the bounded open set that satisfies \(\partial \Omega =\gamma ([0,1])\); by the Jordan curve theorem, exactly one such \(\Omega \) exists. Let \(W\) be open and satisfy \(\overline \Omega \subset W\). Let \(\vec F:W\to \R ^2\) be a \(C^1\) function. Then

\begin{equation*}\int _0^1 \vec F(\gamma (t))\cdot \gamma '(t)\,dt = \pm \int _\Omega \frac {\partial F_2}{\partial x}-\frac {\partial F_1}{\partial y}\,dx\,dy\end{equation*}where the sign is determined by the orientation of \(\gamma \).

This will be proven as homework.

This is routine calculation. By the quotient rule of undergraduate calculus,\begin{equation*}\p {x}g = \frac {1(x^2+y^2)-x(2x)}{(x^2+y^2)^2} = \frac {y^2-x^2}{(x^2+y^2)^2} \end{equation*}and\begin{equation*}\p {y}h = \frac {-1(x^2+y^2)-(-y)(2y)}{(x^2+y^2)^2} = \frac {y^2-x^2}{(x^2+y^2)^2}\end{equation*}which are equal.

The domain \(\Omega \) is not simply connected.

Let \(g=\frac {\partial u}{\partial x}\) and let \(h=-\frac {\partial u}{\partial y}\). Then\begin{equation*}\p [g]{x}=\frac {\partial ^2 u}{\partial x^2} = -\frac {\partial ^2 u}{\partial y^2}=\p [h]{y}\end{equation*}by definition of \(g\) and \(h\) and because \(u\) is harmonic. Thus by Bonus Problem 790 there is a \(v:\Omega \to \R \) such that \(\p [v]{y}=g=\frac {\partial u}{\partial x}\) and \(\p [v]{x}=h=-\frac {\partial u}{\partial y}\). Then \(u\) and \(v\) satisfy the Cauchy-Riemann equations, and so by Problem 650, \(f=u+iv\) is holomorphic in \(\Omega \).

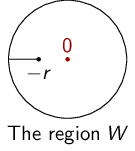

[Chapter 1, Problem 52] The function \(f(z)=1/z\) is holomorphic on \(\Omega =\{z\in \C :1<|z|<2\}\) but has no holomorphic antiderivative on \(\Omega \).

where \(\cdot \) is the dot product in the vector space \(\R ^2\), and \(\gamma _1\), \(\gamma _2:(a,b)\to \R \) are the \(C^1\) functions such that \(\gamma (t)=(\gamma _1(t),\gamma _2(t))\).

[Definition: Continuous] Let \((X,d)\) and \((Z,\rho )\) be two metric spaces and let \(f:X\to Z\). We say that \(f\) is continuous at \(x\in X\) if, for all \(\varepsilon >0\), there is a \(\delta >0\) such that if \(d(x,y)<\delta \) and \(y\in X\) then \(\rho (f(x),f(y))<\varepsilon \).

Let \(m\), \(M\in [c,d]\) with \(\varphi (m)\leq \varphi (t)\leq \varphi (M)\) for all \(t\in [c,d]\). Such \(m\) and \(M\) exist by Memory 872. Suppose for the sake of contradiction that \(\varphi (m)<\varphi (c)\) and \(\varphi (m)<\varphi (d)\). Then \(c\neq m\neq d\) and so \(c<m<d\). Let \(y\) satisfy \(\varphi (m)<y<\min (\varphi (c),\varphi (d))\). By the intermediate value theorem, there are points \(t_1\) and \(t_2\) with \(c<t_1<m<t_2<d\) (in particular, \(t_1\neq t_2\)) and \(\varphi (t_1)=y=\varphi (t_2)\). But then \(\varphi \) is not injective. This contradicts our assumption on \(\varphi \), and so either \(\varphi (m)=\varphi (c)\) and so \(m=c\), or \(\varphi (m)=\varphi (d)\) and so \(m=d\).

Similarly, \(M\in \{c,d\}\). Since \(c<d\), the interval \([c,d]\) contains more than one point, and since \(\varphi \) is injective, \(\varphi \) is not constant; thus \(M\neq m\) and so either \(M=c\), \(m=d\) or \(m=c\), \(M=d\).

If \(m=c\), \(M=d\), then \(\varphi (c)<\varphi (t)<\varphi (d)\) for all \(t\in (c,d)\). If \(c\leq t_1<t_2\leq d\) and \(\varphi (t_1)>\varphi (t_2)\), then another application of the intermediate value theorem contradicts injectivity of \(\varphi \), and so \(\varphi \) must be increasing; because \(\varphi \) is injective it must be strictly increasing. Similarly, if \(m=d\), \(M=c\), then \(\varphi \) is strictly decreasing, as desired.

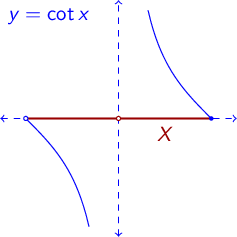

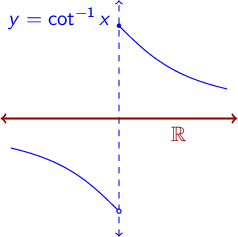

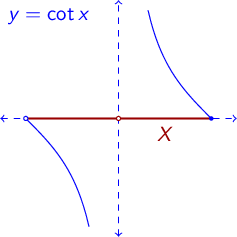

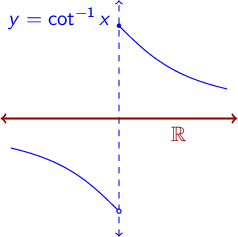

No. Let \(X=(-\pi /2,0)\cup (0,\pi /2]\subset \R \) with the usual metric on \(\R \). Then the function \(\cot :X\to \R \) is continuous on \(X\) and is a bijection, but \(\cot ^{-1}(0)=\frac {\pi }{2}\) and \(\lim _{x\to 0^-}\cot ^{-1}(x)=-\frac {\pi }{2}\), and so \(\cot ^{-1}\) (with the given range) is discontinuous at \(0\).

Definition 2.1.1. (\(C^1\) on a closed set.) Let \([a,b]\subseteq \R \) be a closed bounded interval and let \(f:[a,b]\to \R \). We say that \(f\in C^1([a,b])\), or \(f\) is continuously differentiable on \([a,b]\), if

[Definition: One-sided derivative] If \(f:[a,b]\to \R \), we define \(f'(a)=\lim _{t\to a^+} \frac {f(t)-f(a)}{t-a}\) and \(f'(b)=\lim _{t\to b^-} \frac {f(b)-f(t)}{b-t}\), if these limits exist.

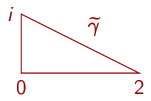

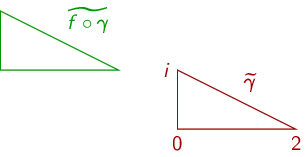

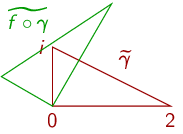

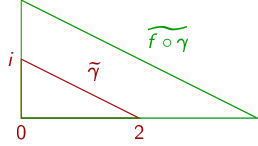

[Definition: Curve] A curve in \(\R ^2\) is a continuous function \(\gamma :[a,b]\to \C \), where \([a,b]\subseteq \R \) is a closed and bounded interval. The trace (or image) of \(\gamma \) is \(\widetilde \gamma =\gamma ([a,b])=\{\gamma (t):t\in [a,b]\}\).

[Definition: Closed; simple] A curve \(\gamma :[a,b]\to \R ^2\) is closed if \(\gamma (a)=\gamma (b)\). A closed curve is simple if \(\gamma (b)=\gamma (a)\) and \(\gamma \) is injective on \([a,b)\) (equivalently on \((a,b]\)).

[Definition: \(C^1\) curve in \(\R ^2\)] A curve \(\gamma :[a,b]\to \R ^2\) is \(C^1\) (or continuously differentiable) if \(\gamma (t)=(\gamma _1(t),\gamma _2(t))\) for all \(t\in [a,b]\) and both \(\gamma _1\), \(\gamma _2\) are \(C^1\) on \([a,b]\). We write

[Definition: Arc length] If \(\gamma :[a,b]\to \R ^2\) is a \(C^1\) curve, then its length (or arc length) is \(\int _a^b \|\gamma '(t)\|\,dt\).
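As a quick check of this definition (the circle below is an illustrative choice, not taken from the notes), let \(r>0\) and \(\gamma (t)=(r\cos t,r\sin t)\) for \(0\leq t\leq 2\pi \). Then\begin{align*} \gamma '(t)&=(-r\sin t,\,r\cos t), \\ \|\gamma '(t)\|&=\sqrt {r^2\sin ^2 t+r^2\cos ^2 t}=r, \\ \int _0^{2\pi } \|\gamma '(t)\|\,dt&=2\pi r, \end{align*}which agrees with the usual circumference of a circle of radius \(r\).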

Proposition 2.1.4. Let \(\gamma \in C^1([a,b])\), \(\gamma :[a,b]\to \Omega \) for some open set \(\Omega \subseteq \R ^2\) and let \(f:\Omega \to \R \) with \(f\in C^1(\Omega )\). Then

By the multivariable chain rule,\begin{equation*}\frac {d(f\circ \gamma )}{dt}= \frac {\partial f}{\partial x}\Big \vert _{(x,y)=\gamma (t)}\frac {\partial \gamma _1}{\partial t} +\frac {\partial f}{\partial y}\Big \vert _{(x,y)=\gamma (t)}\frac {\partial \gamma _2}{\partial t}.\end{equation*}The result then follows from the fundamental theorem of calculus.

[Definition: Real line integral] Let \(\gamma \in C^1([a,b])\), \(\gamma :[a,b]\to \Omega \) for some open set \(\Omega \subseteq \R ^2\) and \(F:\Omega \to \R \) be continuous on \(\Omega \). We define

Definition 2.1.3. (Integral of a complex function.) If \(f:[a,b]\to \C \), and both \(\re f\) and \(\im f\) are integrable on \([a,b]\), we define \(\int _a^b f=\int _a^b\re f+i\int _a^b \im f\).

Proposition 2.1.7. Suppose that \(a<b\) and that \(f:[a,b]\to \C \) is continuous. Then \(|\int _a^b f|\leq \int _a^b |f|\leq (b-a)\sup _{[a,b]}|f|\).

First,\begin{align*} \biggl |\int _a^b f\biggr | &\leq \biggl |\re \int _a^b f\biggr |+\biggl |\im \int _a^b f\biggr | = \biggl |\int _a^b \re f\biggr |+\biggl |\int _a^b \im f\biggr |. \end{align*}By continuity of \(\re f\) and \(\im f\) and compactness of \([a,b]\), we have that \(\sup _{[a,b]}|\re f|<\infty \) and \(\sup _{[a,b]}|\im f|<\infty \) and so the integral is finite by Memory 870.

If \(\int _a^b f=0\) we are done. Otherwise, let \(\theta \in \R \) be such that \(e^{i\theta }\int _a^b f\) is a nonnegative real number. Then \(|\int _a^b f|=\int _a^b e^{i\theta } f\). But \(|\int _a^b f|\) is real and so \(\im \int _a^b e^{i\theta } f=0\). Therefore \(|\int _a^b f|=\re \int _a^b e^{i\theta } f =\int _a^b \re (e^{i\theta } f)\). Because \(\re (e^{i\theta } f)\leq |e^{i\theta } f|=|f|\), the result then follows from the corresponding result for real integrals.
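To see the rotation argument in a concrete case (the function here is an illustrative choice, not part of the notes), take \(f(t)=e^{it}=\cos t+i\sin t\) on \([0,\pi ]\). Then\begin{align*} \int _0^\pi f&=\int _0^\pi \cos t\,dt+i\int _0^\pi \sin t\,dt=0+2i=2i, \\ \biggl |\int _0^\pi f\biggr |&=2\leq \int _0^\pi |f|=\pi =(\pi -0)\sup _{[0,\pi ]}|f|. \end{align*}Here one may take \(\theta =-\pi /2\), since \(e^{-i\pi /2}\cdot 2i=2\) is a nonnegative real number, and indeed \(\re (e^{-i\pi /2}e^{it})=\sin t\) with \(\int _0^\pi \sin t\,dt=2\).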

Definition 2.1.2. (\(C^1\) curve in \(\C \).) A curve \(\gamma :[a,b]\to \C \) is a \(C^1\) curve (in \(\C \)) if \((\re \gamma ,\im \gamma )\) is a \(C^1\) curve (in \(\R ^2\)). We write

We compute that\begin{align*} \lim _{s\to t}\frac {\gamma (s)-\gamma (t)}{s-t} &=\lim _{s\to t}\biggl ( \frac {\re \gamma (s)-\re \gamma (t)}{s-t} +i\frac {\im \gamma (s)-\im \gamma (t)}{s-t}\biggr ). \end{align*}By linearity of limits

\begin{align*} \lim _{s\to t}\frac {\gamma (s)-\gamma (t)}{s-t} &=\left ( \lim _{s\to t}\frac {\re \gamma (s)-\re \gamma (t)}{s-t}\right )+i\left (\lim _{s\to t}\frac {\im \gamma (s)-\im \gamma (t)}{s-t}\right ) = (\re \gamma )'(t)+i(\im \gamma )'(t)\end{align*}as desired.

Definition 2.1.5. (Complex line integral.) Let \(\gamma \in C^1([a,b])\), \(\gamma :[a,b]\to \Omega \) for some \(\Omega \subseteq \C \), and let \(F:\Omega \to \C \) be continuous on \(\Omega \). We define

Show that

Proposition 2.1.6. Let \(\gamma :[a,b]\to \Omega \subseteq \C \) be \(C^1\), where \(\Omega \) is open, and let \(f\) be holomorphic in \(\Omega \). Then

Let \(f(z)=\overline {z}\). Then \(\frac {\partial f}{\partial z}=0\) and so the left hand side is zero, but \(\overline z\) is one-to-one and so the right hand side is not zero unless \(\gamma \) is closed; for example, if \(\gamma (t)=t\), \(a=0\), \(b=1\), then \(f(\gamma (b))-f(\gamma (a))=1\neq 0\).

By the fundamental theorem of calculus, we have that\begin{align*}f(\gamma (b))-f(\gamma (a)) &=\bigl [\re f(\gamma (b))-\re f(\gamma (a))\bigr ] + i\bigl [\im f(\gamma (b))-\im f(\gamma (a))\bigr ] \\&= \int _a^b \frac {d}{dt} \re f(\gamma (t)) \,dt + i \int _a^b \frac {d}{dt} \im f(\gamma (t)) \,dt \\&= \int _a^b \frac {d}{dt} \re f(\gamma (t)) + i \frac {d}{dt} \im f(\gamma (t)) \,dt \\&= \int _a^b \frac {d}{dt} f(\gamma (t)) \,dt .\end{align*}By Memory 841 (with \(\gamma '(t)\) viewed as a vector in \(\R ^2\))

\begin{align*}\frac {d}{dt} \re f(\gamma (t)) &= \nabla (\re f)(\gamma (t)) \cdot \gamma '(t) .\end{align*}Let \(\gamma (t)=\gamma _1(t)+i\gamma _2(t)=(\gamma _1(t),\gamma _2(t))\) where \(\gamma _1\), \(\gamma _2\) are real valued functions. Then

\begin{align*}\frac {d}{dt} \re f(\gamma (t)) &= \p [(\re f)]{x}(\gamma (t))\,\gamma _1'(t) +\p [(\re f)]{y}(\gamma (t))\,\gamma _2'(t) .\end{align*}Similarly

\begin{align*}\frac {d}{dt} \im f(\gamma (t)) &= \p [(\im f)]{x}(\gamma (t))\,\gamma _1'(t) +\p [(\im f)]{y}(\gamma (t))\,\gamma _2'(t) \end{align*}and so

\begin{align*}\frac {d}{dt} f(\gamma (t)) &= \frac {d}{dt} \re f(\gamma (t)) + i \frac {d}{dt} \im f(\gamma (t)) \\&= \p [ f]{x}(\gamma (t))\,\gamma _1'(t) +\p [f]{y}(\gamma (t))\,\gamma _2'(t) .\end{align*}By Problem 660, and because \(f\) is holomorphic, we have that

\begin{align*}\frac {d}{dt} f(\gamma (t)) &= \p [ f]{z}(\gamma (t))\,\gamma _1'(t) +i\p [f]{z}(\gamma (t))\,\gamma _2'(t) \\&=\p [ f]{z}(\gamma (t))\,\gamma '(t) .\end{align*}Thus

\begin{align*}f(\gamma (b))-f(\gamma (a)) &= \int _a^b \frac {d}{dt} f(\gamma (t)) \,dt \\&= \int _a^b \p [ f]{z}(\gamma (t))\,\gamma '(t) \,dt .\end{align*}By definition

\begin{equation*}\oint _\gamma \frac {\partial f}{\partial z}\,dz = \int _a^b \p [f]{z}(\gamma (t))\,\gamma '(t)\,dt.\end{equation*}This completes the proof.
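As a sanity check of the identity just proved (the function and curve are illustrative choices), let \(f(z)=z^2\), so that \(\frac {\partial f}{\partial z}=2z\), and let \(\gamma (t)=e^{it}\) for \(0\leq t\leq \pi /2\). Then\begin{align*} \oint _\gamma \frac {\partial f}{\partial z}\,dz &=\int _0^{\pi /2} 2e^{it}\cdot ie^{it}\,dt =\int _0^{\pi /2} (-2\sin 2t)\,dt+i\int _0^{\pi /2} 2\cos 2t\,dt \\&=\bigl [\cos 2t\bigr ]_0^{\pi /2}+i\bigl [\sin 2t\bigr ]_0^{\pi /2} =-2, \end{align*}while \(f(\gamma (\pi /2))-f(\gamma (0))=i^2-1^2=-2\), so the two sides agree.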

Proposition 2.1.8. If \(\gamma :[a,b]\to \Omega \subseteq \C \) is a \(C^1\) curve and \(f:\Omega \to \C \) is continuous, then \(\displaystyle \left |\oint _\gamma f(z)\,dz\right |\leq \sup _{[a,b]} |f\circ \gamma | \cdot \ell (\gamma )=\sup _{\widetilde \gamma } |f| \cdot \ell (\gamma )\), where \(\ell (\gamma )=\int _a^b |\gamma '|\).

By definition\begin{equation*}\oint _\gamma f(z)\,dz = \int _a^b f(\gamma (t))\,\gamma '(t)\,dt. \end{equation*}By Problem 940\begin{align*} \biggl |\oint _\gamma f(z)\,dz\biggr | &\leq \int _a^b |f(\gamma (t))|\,|\gamma '(t)|\,dt \leq \sup _{[a,b]} |f\circ \gamma | \int _a^b |\gamma '(t)|\,dt. \end{align*}Recalling the definition of arc length completes the proof.
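For a concrete instance of this estimate (an illustrative choice of \(f\) and \(\gamma \), not from the notes), let \(f(z)=z^2\) and \(\gamma (t)=e^{it}\), \(0\leq t\leq \pi /2\). Then\begin{align*} \oint _\gamma z^2\,dz &=\int _0^{\pi /2} e^{2it}\cdot ie^{it}\,dt =\int _0^{\pi /2} (-\sin 3t)\,dt+i\int _0^{\pi /2}\cos 3t\,dt =-\frac {1}{3}-\frac {i}{3}, \\ \biggl |\oint _\gamma z^2\,dz\biggr |&=\frac {\sqrt 2}{3}, \qquad \sup _{\widetilde \gamma }|f|\cdot \ell (\gamma )=1\cdot \frac {\pi }{2}, \end{align*}and indeed \(\frac {\sqrt 2}{3}\approx 0.47\leq \frac {\pi }{2}\approx 1.57\). In this case the bound is far from sharp, because the integrand rotates along the curve.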

Proposition 2.1.9. Let \(\Omega \subseteq \C \), let \(F:\Omega \to \C \) be continuous, let \(\gamma _1:[a,b]\to \Omega \) be a \(C^1\) curve, and let \(\varphi :[c,d]\to [a,b]\) be \(C^1\) and bijective. Define \(\gamma _2=\gamma _1\circ \varphi \).

If \(\varphi \) is strictly increasing, then \(\oint _{\gamma _1} F(z)\,dz=\oint _{\gamma _2} F(z)\,dz\).

More precisely, let \(\widetilde \gamma \subseteq \Omega \subseteq \C \). Suppose that there is at least one injective or simple closed \(C^1\) curve \(\gamma _1:[a,b]\to \Omega \) such that \(\widetilde \gamma _1=\widetilde \gamma \).

If \(\gamma _2:[c,d]\to \Omega \) is any other injective or simple closed \(C^1\) curve with \(\widetilde \gamma _2=\widetilde \gamma \), then either \(\oint _{\gamma _1} F(z)\,dz=\oint _{\gamma _2} F(z)\,dz\) for all functions \(F:\Omega \to \C \) continuous, or \(\oint _{\gamma _1} F(z)\,dz=-\oint _{\gamma _2} F(z)\,dz\) for all functions \(F:\Omega \to \C \) continuous.

Furthermore, if \(\gamma _1\) and \(\gamma _2\) are injective, if \(z_1\), \(z_2\in \widetilde \gamma \), and if \(\gamma _1^{-1}(z_1)<\gamma _1^{-1}(z_2)\) and \(\gamma _2^{-1}(z_1)<\gamma _2^{-1}(z_2)\), then \(\oint _{\gamma _1} F(z)\,dz=\oint _{\gamma _2} F(z)\,dz\) for all functions \(F:\Omega \to \C \) continuous.

Finally, if \(\gamma _1\) and \(\gamma _2\) are simple closed curves, if \(z_1\), \(z_2\), \(z_3\in \widetilde \gamma \), if \(\gamma _1(a)=\gamma _1(b)=\gamma _2(c)=\gamma _2(d)=z_3\), and if \(\gamma _1^{-1}(z_1)<\gamma _1^{-1}(z_2)\) and \(\gamma _2^{-1}(z_1)<\gamma _2^{-1}(z_2)\), then \(\oint _{\gamma _1} F(z)\,dz=\oint _{\gamma _2} F(z)\,dz\) for all functions \(F:\Omega \to \C \) continuous.

By definition \(\gamma _1:[a,b]\to \widetilde \gamma _1\) is a bijection. By Memory 880 and Memory 890, we have that the inverse \(\gamma _1^{-1}\) is also continuous. Thus \(\varphi =\gamma _1^{-1}\circ \gamma _2\) is a continuous bijection from \([c,d]\) to \([a,b]\) that satisfies \(\gamma _2=\gamma _1\circ \varphi \). By Problem 873, and because \(\varphi (c)=\gamma _1^{-1}(\gamma _2(c))=a\), we have that \(\varphi \) is strictly increasing.

No. Let \(\gamma _1(t)=e^{it}\) and let \(\gamma _2(t)=e^{-it}\), both with domain \([-\pi ,\pi ]\). If \(\gamma _2(t)=\gamma _1(\varphi (t))\), then \(\varphi (t)=-t\) for all \(t\in (-\pi ,\pi )\), and so in particular \(\varphi \) cannot be strictly increasing.

The function\begin{equation*}\gamma _3(t)=\begin {cases} \gamma _1(b+(b-a)t^3), & -1\leq t\leq 0,\\ \gamma _2(c+(d-c)t^3),& 0\leq t\leq 1\end {cases}\end{equation*}satisfies the given conditions. Note in particular that \(\gamma _3'(0)=0\).

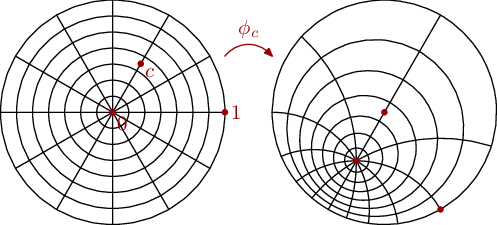

By definition\begin{equation*}\oint _{u\circ \gamma } f(w)\,dw=\int _0^1 f(u\circ \gamma (t)) \, (u\circ \gamma )'(t)\,dt, \qquad \oint _\gamma f(u(z))\,\frac {\partial u}{\partial z}\,dz =\int _0^1 f(u(\gamma (t))) \,\frac {\partial u}{\partial z}\big \vert _{z=\gamma (t)} \,\gamma '(t)\,dt.\end{equation*}As in the proof of Problem 970, because \(u\) is holomorphic we have that \((u\circ \gamma )'(t)=\frac {\partial u}{\partial z}\big \vert _{z=\gamma (t)} \,\gamma '(t)\). This completes the proof.

[Definition: Limit in metric spaces] If \((X,d)\) and \((Z,\rho )\) are metric spaces, \(p\in Z\), \(\ell \in X\), and \(f:Z\setminus \{p\}\to X\), we say that \(\lim _{z\to p}f(z)=\ell \) if, for all \(\varepsilon >0\), there is a \(\delta >0\) such that if \(z\in Z\) and \(0<\rho (z,p)<\delta \), then \(d(f(z),\ell )<\varepsilon \).

[Definition: Continuous function on metric spaces] If \((X,d)\) and \((Z,\rho )\) are metric spaces and \(f:Z\to X\), we say that \(f\) is continuous at \(p\in Z\) if \(f(p)=\lim _{z\to p} f(z)\).

[Definition: Disc] The open disc (or ball) in \(\C \) of radius \(r\) and center \(p\) is \(D(p,r)=B(p,r)=\{z\in \C :|z-p|<r\}\). The closed disc (or ball) in \(\C \) of radius \(r\) and center \(p\) is \(\overline D(p,r)=\overline B(p,r)=\{z\in \C :|z-p|\leq r\}\).

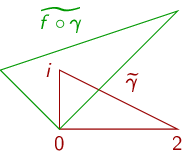

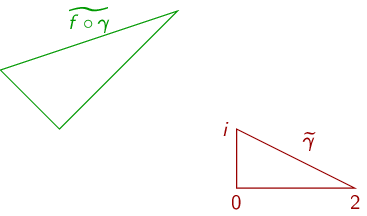

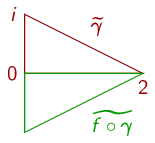

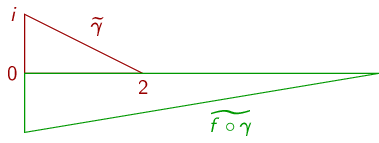

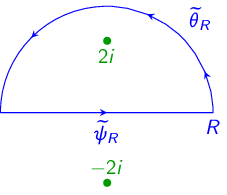

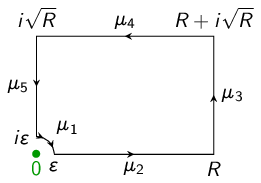

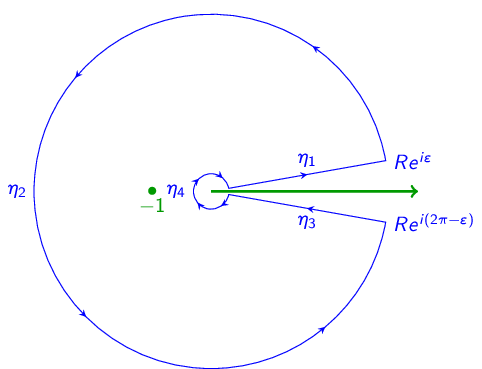

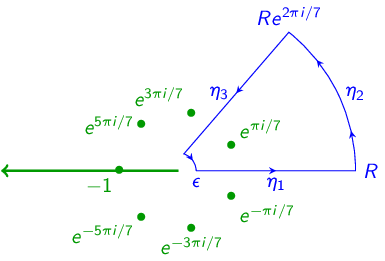

We chose \(\gamma \) to be a parameterization of:

Then:

- (a) \(f(z)=z-3+i\)

- (b) \(f(z)=(\frac {1}{2}+\frac {\sqrt {3}}{2}i)z\)

- (c) \(f(z)=2z\)

- (d) \(f(z)=(1+i)z\)

- (e) \(f(z)=(1+i)z-3+i\)

- (f) \(f(z)=\bar z\)

- (g) \(f(z)=z+2\bar z\)

[Definition: Complex derivative] Let \(p\in \Omega \subseteq \C \), where \(\Omega \) is open. Let \(f:\Omega \to \C \). Suppose that \(\lim _{z\to p} \frac {f(z)-f(p)}{z-p}\) exists. Then we say that \(f\) has a complex derivative at \(p\) and write \(f'(p)=\lim _{z\to p} \frac {f(z)-f(p)}{z-p}\).

[Chapter 2, Problem 10] If \(f\) has a complex derivative at \(p\), then \(f\) is continuous at \(p\).

[Chapter 2, Problem 13] If \(\Omega \subseteq \C \) is open, \(p\in \Omega \), and \(f\), \(g:\Omega \to \C \) are functions that have complex derivatives at \(p\), and if \(\alpha \), \(\beta \in \C \), then \(\alpha f+\beta g\) and \(fg\) have complex derivatives at \(p\) and

If in addition \(g(p)\neq 0\) then \(f/g\) has a complex derivative at \(p\) and

Theorem 2.2.2. Suppose that \(f\) has a complex derivative at \(p\). Then \(\frac {\partial f}{\partial z}\big \vert _{z=p}=f'(p)\).

First, observe that\begin{equation*}\frac {\partial f}{\partial x}\Big \vert _{x+iy=p} = \lim _{\substack {s\to 0\\s\in \R }} \frac {f(p+s)-f(p)}{s}.\end{equation*}Because \(f'(p)=\lim _{z\to p}\frac {f(z)-f(p)}{z-p}\), by definition of limit, for every \(\varepsilon >0\) there is a \(\delta >0\) such that if \(z\in \C \) and \(0<|z|<\delta \) then \(\left |\frac {f(p+z)-f(p)}{z}-f'(p)\right |<\varepsilon \). This in particular is true if \(s\) is real and \(0<|s|<\delta \), as real numbers are complex numbers. Thus we must have that \(f'(p)=\frac {\partial f}{\partial x}\big \vert _{x+iy=p}\). Similarly,

\begin{equation*}\frac {\partial f}{\partial y}\Big \vert _{x+iy=p} = \lim _{\substack {s\to 0\\s\in \R }} \frac {f(p+is)-f(p)}{s} = i\lim _{\substack {s\to 0\\s\in \R }} \frac {f(p+is)-f(p)}{is}=if'(p) .\end{equation*}We thus compute\begin{equation*}\frac {\partial f}{\partial z}\bigg \vert _{z=p} =\frac {1}{2}\biggl (\p [f]{x}\bigg \vert _{x+iy=p}+\frac {1}{i} \p [f]{y}\bigg \vert _{x+iy=p}\biggr ) =f'(p)\end{equation*}and\begin{equation*}\frac {\partial f}{\partial \bar z}\bigg \vert _{z=p} =\frac {1}{2}\biggl (\p [f]{x}\bigg \vert _{x+iy=p}-\frac {1}{i} \p [f]{y}\bigg \vert _{x+iy=p}\biggr ) =0.\end{equation*}
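As an illustration of these formulas (the function is an illustrative choice), let \(f(z)=z^2=(x^2-y^2)+2ixy\), which has complex derivative \(f'(z)=2z\). Then\begin{align*} \frac {\partial f}{\partial x}&=2x+2iy=2z=f'(z), \\ \frac {\partial f}{\partial y}&=-2y+2ix=2i(x+iy)=if'(z), \\ \frac {\partial f}{\partial z}&=\frac {1}{2}\biggl (2z+\frac {1}{i}\,2iz\biggr )=2z, \qquad \frac {\partial f}{\partial \bar z}=\frac {1}{2}\biggl (2z-\frac {1}{i}\,2iz\biggr )=0, \end{align*}in agreement with the computation above.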

Let\begin{equation*}F(z)=\begin {cases} \frac {f(z)-f(g(t_0))}{z-g(t_0)},& z\in \Omega \setminus \{g(t_0)\},\\ f'(g(t_0)),&z=g(t_0).\end {cases}\end{equation*}Because \(f'(g(t_0))\) exists, \(\lim _{z\to g(t_0)} F(z)=F(g(t_0))\). Now,

\begin{align*}\lim _{s \to t_0} \frac {f\circ g(s)-f\circ g(t_0)}{s-t_0} &=\lim _{s \to t_0} F(g(s)) \frac {g(s)-g(t_0)}{s-t_0} \\&=f'(g(t_0))\,g'(t_0).\end{align*}

Theorem 2.2.1. (Generalization.) Suppose that \(\Omega \subseteq \C \) is open and that \(f\) is \(C^1\) on \(\Omega \). Let \(p\in \Omega \) and suppose \(\p [f]{\bar z}\big \vert _{z=p}=0\). Then \(f\) has a complex derivative at \(p\) and \(f'(p)=\frac {\partial f}{\partial z}\big \vert _{z=p}\).

We compute that (for \(j=1\) or \(j=2\))\begin{align*} F_j(x,y)&= F_j(x,y)-F_j(x,0)+F_j(x,0)-F_j(0,0)+F_j(0,0) \\&= F_j(0,0)+\int _0^x \frac {\partial }{\partial s} F_j(s,0)\,ds + \int _0^y \p {t} F_j(x,t)\,dt. \end{align*}Because the integrands are constants, they may easily be evaluated to yield the desired result.

Define \(\gamma :[0,1]\to \Omega \) by \(\gamma (t)=p+t(\zeta -p)\). Then by Proposition 2.1.6, we have that\begin{equation*}f(\zeta )-f(p)=\oint _{\gamma } \frac {\partial f}{\partial z}\,dz.\end{equation*}By Theorem 2.2.2 (Problem 1110), \(\frac {\partial f}{\partial z}=f'(z)=f'(0)\) because \(f'\) is constant. The result follows by definition of line integral.

Theorem 2.2.3.1. Let \(z_0\in \Omega \subseteq \C \) for some open set \(\Omega \). Let \(f:\Omega \to \C \). Let \(w_1\), \(w_2\in \C \) with \(w_1\), \(w_2\neq 0\). Suppose that \(f'(z_0)\) exists. Then \(\lim _{t\to 0} \frac {|f(z_0+tw_1)-f(z_0)|}{|tw_1|}=\lim _{t\to 0} \frac {|f(z_0+tw_2)-f(z_0)|}{|tw_2|}\).

[Chapter 2, Problem 12] Let \(z_0\in \Omega \subseteq \C \) for some open set \(\Omega \). Let \(f:\Omega \to \C \). Suppose that \(\lim _{t\to 0} \frac {|f(z_0+tw_1)-f(z_0)|}{|tw_1|}=\lim _{t\to 0} \frac {|f(z_0+tw_2)-f(z_0)|}{|tw_2|}\) for all \(w_1\), \(w_2\in \C \setminus \{0\}\). Then either \(f'(z_0)\) exists or \((\overline f)'(z_0)\) exists.

[Definition: Unit vector] If \(z\in \C \setminus \{0\}\), define \(\hat z=\frac {1}{|z|}z\).

[Definition: Directed angle] Let \(z\), \(w\in \C \setminus \{0\}\). Let \(\angle (z,w)\) be the directed angle from \(z\) to \(w\) (that is, the angle between the line from \(0\) to \(z\) and the line from \(0\) to \(w\), chosen such that you move from \(z\) to \(w\) counterclockwise).

[Definition: Angle preserving] Let \(z_0\in \Omega \subseteq \C \) for some open set \(\Omega \). Let \(f:\Omega \to \C \). We say that \(f\) preserves angles at \(z_0\) if, for all \(w_1\), \(w_2\in \C \setminus \{0\}\), we have that

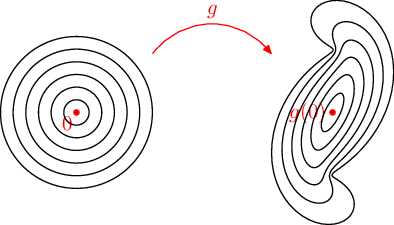

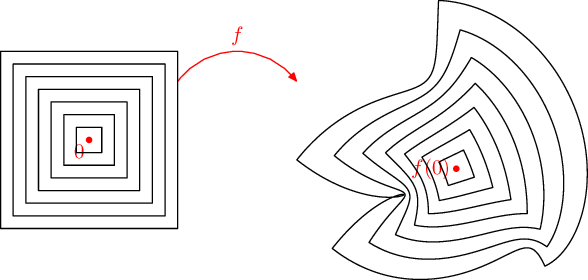

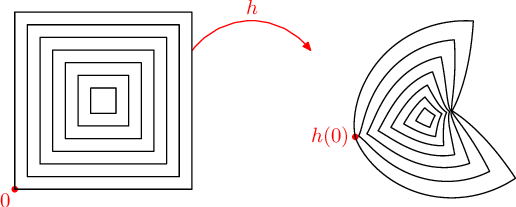

Theorem 2.2.3.2. If \(f'(z_0)\) exists and is not zero, then \(f\) preserves angles at \(z_0\).

[Chapter 2, Problem 9a] If \(f\) is \(C^1\) and preserves angles at \(z_0\), and if the Jacobian matrix of \(f\) at \(z_0\) is nonsingular, then \(f'(z_0)\) exists.

Lemma 2.3.1. Suppose that \(a<p<b\) and let \(H:(a,b)\to \R \) be continuous. Suppose that \(H\) is differentiable on both \((a,p)\) and \((p,b)\), and that \(\lim _{x\to p} H'(x)=h\) for some \(h\in \R \). Then \(H'(p)\) exists and \(H'(p)=\lim _{x\to p} H'(x)\).

Recall [Problem 780]: Suppose that there are two \(C^1\) functions \(g\) and \(h\) defined in an open rectangle or disc \(\Omega \) such that \(\frac {\partial }{\partial x}g=\frac {\partial }{\partial y}h\). Then there is a function \(f\in C^2(\Omega )\) such that \(\frac {\partial f}{\partial y}=g\) and \( \frac {\partial f}{\partial x}=h\).

Theorem 2.3.2. Let \(\Omega \subset \R ^2\) be an open rectangle or disc and let \(P\in \Omega \). Suppose that there are two functions \(g\) and \(h\) that are continuous on \(\Omega \), continuously differentiable on \(\Omega \setminus \{P\}\), and such that \(\frac {\partial }{\partial x}g=\frac {\partial }{\partial y}h\) on \(\Omega \setminus \{P\}\). Then there is a function \(f\in C^1(\Omega )\) such that \(\frac {\partial f}{\partial y}=g\) and \( \frac {\partial f}{\partial x}=h\) everywhere in \(\Omega \) (including at \(P\)).

Let \(P=(x_0,y_0)\) and let \(f(x,y)=\int _{x_0}^x h(s,y_0)\,ds+\int _{y_0}^y g(x,t)\,dt\). Observe that if \((x,y)\in \Omega \) then so are \((s,y_0)\) and \((x,t)\) for all \(s\) between \(x_0\) and \(x\) and all \(t\) between \(y_0\) and \(y\). Thus \(g\) and \(h\) are defined at all required values. Because \(g\) and \(h\) are continuous, the integrals exist. Furthermore, I claim \(f\) is continuous. Let \((x,y)\in \Omega \) and let \(\delta _1>0\) be such that \(B((x,y),\delta _1)\subset \Omega \). By continuity of \(g\) and \(h\) and compactness of \(\overline B((x,y),\delta _1/2)\), \(g\) and \(h\) are bounded on \(\overline B((x,y),\delta _1/2)\). If \((\xi ,\eta )\in B((x,y),\delta _1/2)\), then

\begin{align*} f(\xi ,\eta )-f(x,y) &= \int _x^{\xi } h(s,y_0)\,ds + \int _y^{\eta } g(\xi ,t)\,dt + \int _{y_0}^y g(\xi ,t)-g(x,t)\,dt \end{align*}and so

\begin{align*} |f(\xi &,\eta )-f(x,y)| \\&\leq |\xi -x|\sup _{\overline B((x,y),\delta _1/2)} |h| +|\eta -y|\sup _{\overline B((x,y),\delta _1/2)} |g| + \biggl |\int _{y_0}^y g(x,t)-g(\xi ,t)\,dt\biggr | .\end{align*}Furthermore, \(g\) must be uniformly continuous on \(\overline B((x,y),\delta _1/2)\). Choose \(\varepsilon >0\) and let \(\delta _2\) be such that if \(|(x,t)-(\xi ,t)|<\delta _2\) then \(|g(x,t)-g(\xi ,t)|<\varepsilon \). We then have that

\begin{align*} |f(x&,y)-f(\xi ,\eta )| \leq |\xi -x|\sup _{\overline B((x,y),\delta _1/2)} |h| +|\eta -y|\sup _{\overline B((x,y),\delta _1/2)} |g| +|y_0-y|\varepsilon .\end{align*}There is then a \(\delta _3>0\) such that if \(|(\xi ,\eta )-(x,y)|<\delta _3\) then \(|f(x,y)-f(\xi ,\eta )|<(1+|y_0-y|)\varepsilon \), and so \(f\) is continuous at \((x,y)\), as desired.

By the fundamental theorem of calculus, we have that \(\frac {\partial f}{\partial y}=g\) everywhere in \(\Omega \), including at \((x,y)=(x_0,y_0)=P\).

Furthermore, by Problem 770 and the fundamental theorem of calculus, we have that if \(x\neq x_0\) then

\begin{equation*}\frac {\partial f}{\partial x}(x,y) =h(x,y_0)+\int _{y_0}^y \frac {\partial g}{\partial x}(x,t)\,dt.\end{equation*}Again because \(x\neq x_0\), we have that \(\p [g]{x}(x,t)=\p [h]{t}(x,t)\) and so by the fundamental theorem of calculus \(\p [f]{x}=h\) provided \(x\neq x_0\). We need only show that \(\p [f]{x}=h\) even if \(x=x_0\). Fix a \(y\) and let \(F_y(x)=f(x,y)\). Then \(I=\{x\in \R :(x,y)\in \Omega \}\) is an open interval. We need only consider the case where \(I\neq \emptyset \). Then \(F_y\) is continuous on \(I\), \(F_y'(x)=h(x,y)\) for all \(x\neq x_0\), and \(\lim _{x\to x_0} F_y'(x)=h(x_0,y)\) because \(h\) is continuous. Thus by Lemma 2.3.1, \(F_y'(x_0)=h(x_0,y)\) and so \(\p [f]{x}=h\) even if \(x=x_0\).

Theorem 2.3.3. Let \(P\in \Omega \), where \(\Omega \) is an open rectangle or disc. Suppose that \(f\) is continuous on \(\Omega \) and holomorphic on \(\Omega \setminus \{P\}\). Then there is a function \(F\) that is holomorphic on all of \(\Omega \) (including \(P\)) such that \(\frac {\partial F}{\partial z} = f\).

Let \(f=u+iv\) where \(u\) and \(v\) are real valued; then by definition \(u\), \(v\) are continuous on \(\Omega \) and \(C^1\) on \(\Omega \setminus \{P\}\). Then by the Cauchy-Riemann equations, we have that \(\p [u]{x}=\p [v]{y}\) on \(\Omega \setminus \{P\}\). Therefore, by Theorem 2.3.2, there is a \(V\in C^1(\Omega )\) such that \(\p [V]{y}=u\) and \(\p [V]{x}=v\) in all of \(\Omega \). Similarly, \(\p [u]{y}=\p [(-v)]{x}\) on \(\Omega \setminus \{P\}\), and so there is a \(U\in C^1(\Omega )\) such that \(\p [U]{x}=u\) and \(\p [U]{y}=-v\).

Let \(F=U+iV\). Then

\begin{equation*}\p [F]{z} = \frac {1}{2}\biggl (\p [F]{x}+\frac {1}{i}\p [F]{y}\biggr ) =\frac {1}{2}\biggl (u+iv+\frac {1}{i}(-v+iu)\biggr )=f \end{equation*}and\begin{equation*}\p [F]{\bar z} = \frac {1}{2}\biggl (\p [F]{x}-\frac {1}{i}\p [F]{y}\biggr ) =\frac {1}{2}\biggl (u+iv-\frac {1}{i}(-v+iu)\biggr )=0 \end{equation*}in \(\Omega \), as desired.
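To see the construction at work in the simplest case (an illustrative choice; here \(f\) is holomorphic on all of \(\Omega \), so the exceptional point plays no role), let \(f(z)=z\), so \(u=x\) and \(v=y\), and take \(P=(0,0)\). Using the formula from the proof of Theorem 2.3.2,\begin{align*} V(x,y)&=\int _0^x v(s,0)\,ds+\int _0^y u(x,t)\,dt=0+xy=xy, \\ U(x,y)&=\int _0^x u(s,0)\,ds+\int _0^y \bigl (-v(x,t)\bigr )\,dt=\frac {x^2}{2}-\frac {y^2}{2}, \\ F&=U+iV=\frac {x^2-y^2}{2}+ixy=\frac {z^2}{2}, \end{align*}and indeed \(\frac {\partial F}{\partial z}=z=f\).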

No. Let \(\Omega =D(0,2)\) and let \(P=0\). Then \(f(z)=1/z\) is holomorphic on \(\Omega \setminus \{P\}\) (see Problem 620) and by Problem 2.4a in your book, if \(\gamma (t)=e^{it}\), \(0\leq t\leq 2\pi \), then \(\oint _{\gamma } f(z)\,dz=2\pi i\). But if \(F\) is holomorphic in \(\Omega \setminus \{P\}\) and \(F'=f\), then by Problem 970

\begin{align*}\oint _\gamma f(z)\,dz &= \oint _\gamma \frac {\partial F}{\partial z} \,dz=F(\gamma (2\pi ))-F(\gamma (0))=F(1)-F(1)=0.\end{align*}This is a contradiction; therefore, no such \(F\) can exist.
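For reference, the value \(2\pi i\) quoted above can be checked directly from the definition of the line integral. With \(\gamma (t)=e^{it}\), \(0\leq t\leq 2\pi \), we have \(\gamma '(t)=ie^{it}\), and so\begin{equation*}\oint _\gamma \frac {1}{z}\,dz=\int _0^{2\pi } \frac {1}{e^{it}}\,ie^{it}\,dt=\int _0^{2\pi } i\,dt=2\pi i.\end{equation*}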

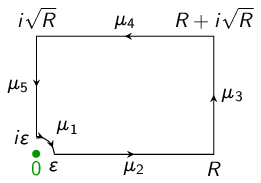

Theorem 2.4.3. [The Cauchy integral theorem.] Let \(f\) be holomorphic in \(D(P,R)\). Let \(\gamma :[a,b]\to D(P,R)\) be a closed \(C^1\) curve. Then \(\oint _\gamma f(z)\,dz=0\).

[Chapter 2, Problem 1] Prove the Cauchy integral theorem.

Theorem 2.4.2. [The Cauchy integral formula.] Let \(\Omega \subseteq \C \) be open and let \(\overline D(z_0,r)\subset \Omega \). Let \(f\) be holomorphic in \(\Omega \) and let \(z\in D(z_0,r)\). Then

Lemma 2.4.1. The Cauchy integral formula is true in the special case where \(f(\zeta )=1\) for all \(\zeta \in \C \).

\(\oint _\gamma \frac {1}{\zeta -z_0}\,d\zeta =2\pi i\) (this is a routine computation). If \(\zeta \neq x+iy\), then

\begin{align*} \p {x} \frac {1}{\zeta -(x+iy)} &=\p {x}\frac {\overline \zeta -x+iy}{(\re \zeta -x)^2+(\im \zeta -y)^2} \\&=\frac {-|\zeta -(x+iy)|^2+(\overline \zeta -x+iy)(2(\re \zeta -x))}{|\zeta -(x+iy)|^4} \\&=\frac {-|\zeta -(x+iy)|^2+(\overline \zeta -x+iy)((\overline \zeta -x+iy)+(\zeta -x-iy))}{(\zeta -x-iy)^2(\overline \zeta -x+iy)^2} \\&= \frac {1}{(\zeta -(x+iy))^2} .\end{align*}Now, by the previous problem, if \(x+iy\in D(z_0,r)\), then

\begin{align*} \frac {\partial }{\partial x} \oint _\gamma \frac {1}{\zeta -(x+iy)}\,d\zeta &= \oint _\gamma \p {x}\frac {1}{\zeta -(x+iy)}\,d\zeta \\&= \oint _\gamma \frac {1}{(\zeta -(x+iy))^2}\,d\zeta .\end{align*}Let \(F(\zeta )=\frac {-1}{\zeta -(x+iy)}\). By Problem 620 and the chain rule we have that \(\p {\zeta } F(\zeta )=\frac {1}{(\zeta -(x+iy))^2}\). Thus

\begin{align*} \frac {\partial }{\partial x} \oint _\gamma \frac {1}{\zeta -(x+iy)}\,d\zeta &= \oint _\gamma \p {\zeta }\frac {-1}{\zeta -(x+iy)}\,d\zeta \end{align*}which by Problem 970 is zero because \(\gamma \) is a closed curve.

Similarly

\begin{align*} \frac {\partial }{\partial y} \oint _\gamma \frac {1}{\zeta -(x+iy)}\,d\zeta &= 0 \end{align*}for all \(x+iy\in D(z_0,r)\). Thus \(\oint _\gamma \frac {1}{\zeta -(x+iy)}\,d\zeta \) (regarded as a function of \(x+iy\)) must be a constant; since it equals \(2\pi i\) at \(x+iy=z_0\), it must be \(2\pi i\) everywhere.

By Problem 580 and Problem 620, and by the chain rule (Problem 1.49), if \(n\neq -1\) then\begin{equation*}(\zeta -z)^n=\frac {1}{n+1} \frac {\partial }{\partial \zeta }(\zeta -z)^{n+1}.\end{equation*}Thus\begin{align*}\oint _\gamma (\zeta -z)^n\,d\zeta &= \oint _\gamma \frac {1}{n+1} \frac {\partial }{\partial \zeta }(\zeta -z)^{n+1}\,d\zeta =\frac {(\gamma (2\pi )-z)^{n+1}}{n+1} -\frac {(\gamma (0)-z)^{n+1}}{n+1} =0\end{align*}because \(\gamma (2\pi )=\gamma (0)\).

If \(n=0\) then the result follows from Lemma 2.4.1. Otherwise, by the binomial theorem\begin{equation*}\zeta ^n=[(\zeta -z)+z]^n = \sum _{k=0}^n \binom {n}{k} z^{k}(\zeta -z)^{n-k}.\end{equation*}Thus

\begin{equation*}\oint _\gamma \frac {\zeta ^n}{\zeta -z}\,d\zeta =\sum _{k=0}^n \binom {n}{k}z^{k}\oint _\gamma (\zeta -z)^{n-k-1}\,d\zeta .\end{equation*}If \(k<n\) then the integral is zero, while if \(k=n\) then the integral is \(2\pi i\) by Lemma 2.4.1, as desired.
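For instance, in the case \(n=2\) (written out here only as an illustration of the general argument), the binomial expansion reads \(\zeta ^2=(\zeta -z)^2+2z(\zeta -z)+z^2\), and so\begin{align*} \oint _\gamma \frac {\zeta ^2}{\zeta -z}\,d\zeta &=\oint _\gamma (\zeta -z)\,d\zeta +2z\oint _\gamma 1\,d\zeta +z^2\oint _\gamma \frac {1}{\zeta -z}\,d\zeta \\&=0+0+2\pi i\,z^2. \end{align*}Dividing by \(2\pi i\) recovers \(z^2\), which is the value of \(\zeta ^2\) at \(\zeta =z\).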

Recall [Theorem 2.4.2]: [The Cauchy integral formula.] Let \(\Omega \subseteq \C \) be open and let \(\overline D(z_0,r)\subset \Omega \). Let \(f\) be holomorphic in \(\Omega \) and let \(z\in D(z_0,r)\). Then

[Definition: Integral over a circle] We define \(\oint _{\partial D(P,r)} f(z)\,dz=\oint _\gamma f(z)\,dz\), where \(\gamma \) is a counterclockwise simple parameterization of \(\partial D(P,r)\).

[Chapter 2, Problem 20] Let \(f\) be continuous on \(\overline D(P,r)\) and holomorphic in \(D(P,r)\). Show that \(f(z)=\frac {1}{2\pi i}\oint _{\partial D(P,r)} \frac {f(\zeta )}{\zeta -z}\,d\zeta \) for all \(z\in D(P,r)\).

Proposition 2.6.6. Let \(\Omega =D(P,\tau )\setminus \overline D(P,\sigma )\) for some \(P\in \C \) and some \(0<\sigma <\tau \). Let \(\sigma <r<R<\tau \) and let \(\gamma _r\), \(\gamma _R\) be the counterclockwise parameterizations of \(\partial D(P,r)\), \(\partial D(P,R)\). Suppose that \(f\) is holomorphic in \(\Omega \). Then \(\oint _{\gamma _r} f=\oint _{\gamma _R} f\).

[Definition: Homotopic curves] Let \(a<b\), \(c<d\). Let \(\Omega \subseteq \C \) be open. Let \(\gamma _c\), \(\gamma _d:[a,b]\to \Omega \) be two \(C^1\) curves with the same endpoints (so \(\gamma _c(a)=\gamma _d(a)\), \(\gamma _c(b)=\gamma _d(b)\)).

We say that \(\gamma _c\) and \(\gamma _d\) are \(C^1\)-homotopic in \(\Omega \) if there is a function \(\Gamma \) such that:

\(\Gamma :[a,b]\times [c,d]\to \Omega \),

\(\Gamma (t,c)=\gamma _c(t)\), \(\Gamma (t,d)=\gamma _d(t)\) for all \(t\in [a,b]\),

\(\Gamma (a,s)=\gamma _c(a)=\gamma _d(a)\), \(\Gamma (b,s)=\gamma _c(b)=\gamma _d(b)\) for all \(s\in [c,d]\),

\(\Gamma \) is continuous on \([a,b]\times [c,d]\),

\(\Gamma \) is \(C^1\) in the first variable in the sense that if \(\gamma _s(t)=\Gamma (t,s)\), then \(\gamma _s\in C^1[a,b]\) for all \(c\leq s\leq d\).

[Definition: Homotopic closed curves] Let \(a<b\), \(c<d\). Let \(\Omega \subseteq \C \) be open. Let \(\gamma _c\), \(\gamma _d:[a,b]\to \Omega \) be two closed \(C^1\) curves.

We say that \(\gamma _c\) and \(\gamma _d\) are \(C^1\)-homotopic in \(\Omega \) if there is a function \(\Gamma \) such that:

\(\Gamma :[a,b]\times [c,d]\to \Omega \),

\(\Gamma (t,c)=\gamma _c(t)\), \(\Gamma (t,d)=\gamma _d(t)\) for all \(t\in [a,b]\),

\(\Gamma (a,s)=\Gamma (b,s)\) for all \(s\in [c,d]\),

\(\Gamma \) is continuous on \([a,b]\times [c,d]\),

\(\Gamma \) is \(C^1\) in the first variable on \([a,b]\times [c,d]\).

Let \(a<b\), \(c<d\), and let \(\Gamma :[a,b]\times [c,d]\to \Omega \) be continuous.

Assume that, if \(s\in [c,d]\), then \(\gamma _s:[a,b]\to \Omega \) given by \(\gamma _s(t)=\Gamma (t,s)\) is a \(C^1\) curve.

Further assume that the function \(\frac {\partial \Gamma }{\partial t}\) (with \(\frac {\partial \Gamma }{\partial t}(a,s)\) and \(\frac {\partial \Gamma }{\partial t}(b,s)\) given by one-sided derivatives) is also continuous on \([a,b]\times [c,d]\).

Show that the function \(I(s)=\oint _{\gamma _s} f\) is continuous on \([c,d]\).

By definition of line integral,\begin{equation*}\oint _{\gamma _s} f = \int _a^b f(\Gamma (t,s))\,\p {t}\Gamma (t,s)\,dt.\end{equation*}By Problem 760, if \(c\leq s\leq d\) then \(\oint _{\gamma _s} f\) is continuous (as a function of \(s\)) at \(s\).

Show that

By definition of line integral,\begin{equation*}\oint _{\gamma _s} f = \int _a^b f(\Gamma (t,s))\,\p {t}\Gamma (t,s)\,dt.\end{equation*}By Problem 770,\begin{equation*}\frac {d}{ds}\oint _{\gamma _s} f = \int _a^b \p {s}\biggl (f(\Gamma (t,s))\,\p {t}\Gamma (t,s)\biggr )\,dt.\end{equation*}By the product rule and Problem 1120,\begin{align*}\frac {d}{ds}\oint _{\gamma _s} f &= \int _a^b f'(\Gamma (t,s))\,\p {s}\Gamma (t,s)\,\p {t}\Gamma (t,s) +f(\Gamma (t,s))\,\frac {\partial ^2}{\partial s\,\partial t} \Gamma (t,s) \,dt.\end{align*}By Clairaut’s theorem and the product rule,

\begin{equation*}\frac {d}{ds}\oint _{\gamma _s} f = \int _a^b\p {t}\biggl (f(\Gamma (t,s))\,\p {s}\Gamma (t,s)\biggr )\,dt.\end{equation*}By the fundamental theorem of calculus,\begin{align*}\frac {d}{ds}\oint _{\gamma _s} f &= \biggl (f(\Gamma (b,s))\,\p {s}\Gamma (b,s)\biggr ) - \biggl (f(\Gamma (a,s))\,\p {s}\Gamma (a,s)\biggr ) \end{align*}as desired.

By Problem 1382\begin{equation*}\frac {d}{ds}\oint _{\gamma _s} f = \biggl (f(\Gamma (b,s))\,\p {s}\Gamma (b,s)\biggr )- \biggl (f(\Gamma (a,s))\,\p {s}\Gamma (a,s)\biggr ) .\end{equation*}By definition of homotopy between curves with the same endpoints, \(\Gamma (b,s)=\gamma _c(b)\) for all \(s\), and so \(\p {s}\Gamma (b,s)=0\). Similarly \(\p {s}\Gamma (a,s)=0\) and so\begin{equation*}\frac {d}{ds}\oint _{\gamma _s} f = 0\end{equation*}for all \(c<s<d\). Thus by the mean value theorem and Problem 1381, \(\oint _{\gamma _c}f=\oint _{\gamma _d} f\).

By Problem 1381 and Problem 1382, \(\oint _{\gamma _s} f\) is a continuous function of \(s\) and\begin{align*}\frac {d}{ds}\oint _{\gamma _s} f &= \biggl (f(\Gamma (b,s))\,\p {s}\Gamma (b,s)\biggr ) - \biggl (f(\Gamma (a,s))\,\p {s}\Gamma (a,s)\biggr ) .\end{align*}By definition of homotopy between closed curves, \(\Gamma (b,s)=\Gamma (a,s)\) for all \(s\), and so in particular \(\p {s}\Gamma (b,s)=\p {s}\Gamma (a,s)\). Thus

\begin{align*}\frac {d}{ds}\oint _{\gamma _s} f &= \biggl (f(\Gamma (a,s))\,\p {s}\Gamma (a,s)\biggr ) - \biggl (f(\Gamma (a,s))\,\p {s}\Gamma (a,s)\biggr ) =0 .\end{align*}By the mean value theorem, \(\oint _{\gamma _c} f=\oint _{\gamma _d}f\).
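As an illustration (a sketch with an illustrative choice of homotopy and function, in the setting of Proposition 2.6.6), let \(\Gamma (t,s)=P+se^{it}\) for \((t,s)\in [0,2\pi ]\times [r,R]\), so that \(\gamma _r\) and \(\gamma _R\) are the two counterclockwise circles and \(\Gamma (0,s)=\Gamma (2\pi ,s)\) for all \(s\). Take \(f(z)=\frac {1}{z-P}\), which is holomorphic in the annulus. Then\begin{align*} \oint _{\gamma _s} f&=\int _0^{2\pi } \frac {1}{se^{it}}\,ise^{it}\,dt=2\pi i \quad \text {for every } s\in [r,R], \\ \frac {d}{ds}\oint _{\gamma _s} f&=f(\Gamma (2\pi ,s))\,\frac {\partial \Gamma }{\partial s}(2\pi ,s)-f(\Gamma (0,s))\,\frac {\partial \Gamma }{\partial s}(0,s)=\frac {1}{s}\cdot 1-\frac {1}{s}\cdot 1=0, \end{align*}so the integral is the same over every circle in the family, as the argument above predicts.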

Let \(\gamma :[0,1]\to \Omega \) be homotopic in \(\Omega \) to a point (constant function). Show that \(\oint _\gamma f=0\).

Theorem 3.1.3. [Generalization.] Let \(D(P,r)\subset \C \). Let \(\varphi :\partial D(P,r)\to \C \) be continuous. Let \(k\) be a nonnegative integer. Define \(f:D(P,r)\to \C \) by

[Chapter 2, Problem 21] If \(z\in \partial D(P,r)\), is it necessarily true that \(\lim _{w\to z} f(w)=\varphi (z)\)?

Theorem 3.1.1. Let \(\Omega \subseteq \C \) be open and let \(f:\Omega \to \C \) be holomorphic. Then \(f\in C^\infty (\Omega )\). Moreover, if \(\overline D(P,r)\subset \Omega \), then

Corollary 3.1.2. Let \(\Omega \subseteq \C \) be open and let \(f:\Omega \to \C \) be holomorphic. Then \(\frac {\partial ^k f}{\partial z^k}\) is holomorphic in \(\Omega \) for all \(k\in \N \).
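As a sanity check, assume the derivative formula takes the form \(f^{(k)}(z)=\frac {k!}{2\pi i}\oint _{\partial D(P,r)}\frac {f(\zeta )}{(\zeta -z)^{k+1}}\,d\zeta \) (this is the form that appears in the induction argument for Theorem 3.1.1). Take the illustrative choice \(f(\zeta )=\zeta ^2\), \(P=0\), \(z=0\), and parameterize \(\partial D(0,r)\) by \(\gamma (t)=re^{it}\), \(0\leq t\leq 2\pi \). Then\begin{align*} \frac {1!}{2\pi i}\oint _{\partial D(0,r)}\frac {\zeta ^2}{\zeta ^2}\,d\zeta &=\frac {1}{2\pi i}\int _0^{2\pi } ire^{it}\,dt=0=f'(0), \\ \frac {2!}{2\pi i}\oint _{\partial D(0,r)}\frac {\zeta ^2}{\zeta ^3}\,d\zeta &=\frac {2}{2\pi i}\int _0^{2\pi } \frac {1}{re^{it}}\,ire^{it}\,dt=\frac {2}{2\pi i}\cdot 2\pi i=2=f''(0), \end{align*}which matches \(f'(z)=2z\) and \(f''(z)=2\).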

Let \(\overline D(P,r)\subset \Omega \). By the Cauchy integral formula, if \(z\in D(P,r)\) then\begin{equation*}f(z)=\frac {1}{2\pi i} \oint _{\partial D(P,r)} \frac {f(\zeta )}{\zeta -z}\,d\zeta .\end{equation*}Because \(f\) is holomorphic, it must be continuous, and so we may apply Theorem 3.1.3. We perform an induction argument. Suppose that \(f\), \(f'=\p [f]{z},\dots ,f^{(k-1)}=\frac {\partial ^{k-1}f}{\partial z^{k-1}}\) exist and are \(C^1\) and holomorphic in \(D(P,r)\), and that \(f^{(k)}=\frac {\partial ^{k}f}{\partial z^{k}}\) exists and satisfies

\begin{equation*}f^{(k)}(z)=\frac {k!}{2\pi i} \oint _{\partial D(P,r)} \frac {f(\zeta )}{(\zeta -z)^{k+1}}\,d\zeta \end{equation*}in \(D(P,r)\). We have shown that this is true for \(k=0\). By Theorem 3.1.3, we have that \(f^{(k)}\) is also \(C^1\) and holomorphic in \(D(P,r)\), and that\begin{equation*}f^{(k+1)}=\frac {(k+1)!}{2\pi i} \oint _{\partial D(P,r)} \frac {f(\zeta )}{(\zeta -z)^{k+2}}\,d\zeta .\end{equation*}Because \(f^{(k)}\) is holomorphic, \(f^{(k+1)}=\frac {\partial ^{k+1}f}{\partial z^{k+1}}\). Thus by induction this must be true for all nonnegative integers \(k\). This proves Corollary 3.1.2 and part of Theorem 3.1.1. To prove that \(f\in C^\infty (\Omega )\), observe that

\begin{equation*}\frac {\partial ^{j+\ell } f}{\partial x^j\partial y^\ell } = i^\ell \biggl (\p {z}+\p {\bar z}\biggr )^j \biggl (\p {z}-\p {\bar z}\biggr )^\ell f. \end{equation*}We can write all partial derivatives of \(f\) (in terms of \(x\) and \(y\)) as linear combinations of \(\frac {\partial ^j f}{\partial z^j}\) and \(\frac {\partial ^{m+n+\ell } f}{\partial x^m\partial y^n\partial \bar z^\ell }\) for various values of \(j\), \(\ell \), \(m\), and \(n\), and so

We must show that \(\nabla f(P)\) exists, that \(\nabla f\) is continuous at \(P\), and that \(\p [f]{\bar z}(P)=0\). That is, we only need to work at \(P\). Let \(D\) be an open disc centered at \(P\) and contained in \(\Omega \); by definition of open set, such a \(D\) must exist. Then by Theorem 2.3.3 (Problem 1230) there is a function \(F:D\to \C \) that is holomorphic in \(D\) such that \(f=\p [F]{z}\) in \(D\) (including at \(P\)). By Theorem 3.1.1 and Corollary 3.1.2 (Problem 1440), \(f=\p [F]{z}\) is \(C^1\) and holomorphic in \(D\), and in particular at \(P\).
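The integral formula for \(f^{(k)}\) obtained in the proof of Theorem 3.1.1 can be sanity-checked numerically. The following is a minimal sketch, not part of the course material: the function name `cauchy_derivative`, the choice \(f=\exp \), the disc \(D(0,1)\), and the sample point are all assumptions made for illustration, and the contour integral is approximated by an equally spaced Riemann sum on the circle.

```python
import cmath
import math

def cauchy_derivative(f, k, z, center, radius, n=2000):
    """Approximate k!/(2*pi*i) * integral of f(zeta)/(zeta - z)^(k+1) d(zeta)
    over the circle |zeta - center| = radius, using n equally spaced nodes."""
    total = 0j
    for j in range(n):
        theta = 2 * math.pi * j / n
        zeta = center + radius * cmath.exp(1j * theta)
        # d(zeta) = i * radius * e^{i theta} d(theta), with d(theta) = 2*pi/n
        dzeta = 1j * radius * cmath.exp(1j * theta) * (2 * math.pi / n)
        total += f(zeta) / (zeta - z) ** (k + 1) * dzeta
    return math.factorial(k) / (2j * math.pi) * total

# Illustrative choice: f = exp, whose derivatives of every order equal exp.
z = 0.3 + 0.2j
approx = [cauchy_derivative(cmath.exp, k, z, center=0, radius=1) for k in range(4)]
for k, value in enumerate(approx):
    print(k, value, abs(value - cmath.exp(z)))
```

Every printed error should be at the level of rounding error, since each derivative of \(\exp \) is again \(\exp \).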

Theorem 3.1.4. (Morera’s theorem.) Let \(\Omega \subseteq \C \) be open and connected. Let \(f\in C(\Omega )\) be such that \(\oint _\gamma f=0\) for all closed curves \(\gamma \). Then \(f\) is holomorphic in \(\Omega \).

Fix some \(z_0\in \Omega \). Suppose that \(z\in \Omega \). By Memory 1460, there is a \(C^1\) curve \(\psi =\psi _z:[0,1]\to \Omega \) such that \(\psi (0)=z_0\) and \(\psi (1)=z\). Suppose that \(\tau \) is another such curve, that is, a \(C^1\) function \(\tau :[0,1]\to \Omega \) such that \(\tau (0)=z_0\) and \(\tau (1)=z\). Let \(\tau _{-1}(t)=\tau (1-t)\). Then by Problem 1010, we have that \(\oint _{\tau _{-1}} f=-\oint _{\tau } f\). Furthermore, by Problem 1050, there is a \(C^1\) curve \(\gamma :[0,1]\to \C \) such that \(\gamma (0)=\psi (0)=z_0\), \(\gamma (1)=\tau _{-1}(1)=\tau (0)=z_0\) and such that

\begin{equation*}\oint _\gamma f=\oint _\psi f+\oint _{\tau _{-1}}f=\oint _\psi f-\oint _\tau f.\end{equation*}But \(\gamma \) is closed and so \(\oint _\gamma f=0\), and so \(\oint _\psi f=\oint _\tau f\). Thus, if we define \(F(z)=\oint _{\psi _z} f\), then \(F\) is well defined, as \(F(z)\) is independent of our choice of path \(\psi _z\) from \(z_0\) to \(z\).

Now, let \(z\in \Omega \) and let \(r>0\) be such that \(D(z,r)\subseteq \Omega \); such an \(r\) must exist because \(\Omega \) is open. If \(w\in D(z,r)\setminus \{z\}\), then

\begin{equation*}F(w)-F(z)=\oint _{\psi _w} f-\oint _{\psi _z} f.\end{equation*}Let \(\varphi (t)=z+t(w-z)\), so \(\varphi :[0,1]\to D(z,r)\) is a \(C^1\) path from \(z\) to \(w\). We may assume without loss of generality that \(\psi _w\) is generated from \(\psi _z\) and \(\varphi \) by Problem 1050; thus,\begin{equation*}\frac {F(w)-F(z)}{w-z}=\frac {1}{w-z}\oint _{\varphi } f =\int _0^1 f(z+t(w-z))\,dt .\end{equation*}A straightforward \(\varepsilon \)-\(\delta \) argument yields that\begin{equation*}\lim _{w\to z}\frac {F(w)-F(z)}{w-z}=f(z)\end{equation*}so \(F\) possesses a complex derivative at \(z\). Furthermore, \(F'=f\) is continuous on \(\Omega \). Thus \(F\in C^1(\Omega )\) and is holomorphic on \(\Omega \) by Problem 1130, and so by Theorem 3.1.1 and Corollary 3.1.2 \(f=F'\) is \(C^\infty \) (in particular \(C^1\)) and holomorphic in \(\Omega \).
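The key step of this proof is that the average \(\int _0^1 f(z+t(w-z))\,dt\) tends to \(f(z)\) as \(w\to z\). The sketch below illustrates this numerically under assumptions of our own choosing: the sample function \(f(\zeta )=\zeta ^2\), the base point \(z=1+i\), the vertical approach \(w=z+ih\), and the helper name `average_along_segment` are all hypothetical, and the integral is approximated by a midpoint rule.

```python
def average_along_segment(f, z, w, n=1000):
    """Midpoint-rule approximation of the integral over [0, 1] of f(z + t(w - z)) dt."""
    step = w - z
    return sum(f(z + (j + 0.5) / n * step) for j in range(n)) / n

def f(zeta):
    # Illustrative continuous (indeed holomorphic) function.
    return zeta ** 2

z = 1 + 1j
errors = []
for h in (1e-1, 1e-2, 1e-3):
    w = z + 1j * h  # approach z along a vertical segment
    errors.append(abs(average_along_segment(f, z, w) - f(z)))
print(errors)
```

For this particular \(f\) the exact value of the average is \(z^2+z(w-z)+(w-z)^2/3\), so the error is roughly \(|z|\,|w-z|\) and shrinks linearly with \(|w-z|\).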

[Definition: Taylor series] Let \(f\in C^\infty (a,b)\) and let \(a<c<b\). The Taylor series for \(f\) at \(c\) is \(\sum _{n=0}^\infty \frac {f^{(n)}(c)}{n!}(x-c)^n\) (with the convention \(0^0=1\)).

The function\begin{equation*}f(x)=\begin {cases} \exp (-1/x^2),&x\neq 0,\\0, &x=0\end {cases}\end{equation*}satisfies \(f^{(n)}(0)=0\) for all nonnegative integers \(n\), and thus the Taylor series for \(f\) at \(0\) is zero everywhere; however, \(f(x)\neq 0\) if \(x\neq 0\), and so the Taylor series converges to \(f(x)\) only at \(x=0\).
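The reason every derivative of this function vanishes at \(0\) is that \(f(x)\) tends to zero faster than any power of \(x\). The following sketch tabulates \(f(x)/x^n\) for a few values; the sample points and exponents are arbitrary choices made for illustration.

```python
import math

def f(x):
    """The flat function: exp(-1/x^2) away from 0, and 0 at 0."""
    return math.exp(-1.0 / x**2) if x != 0 else 0.0

samples = (0.2, 0.1, 0.05)
for n in (1, 5, 10):
    # Each row should decrease rapidly toward 0 as x decreases.
    print(n, [f(x) / x**n for x in samples])

quotients = [f(x) / x**10 for x in samples]
```

Even after dividing by \(x^{10}\), the values collapse toward zero, although \(f(x)\) itself is strictly positive at each sample point.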

[Definition: Absolute convergence] Let \(\sum _{n=0}^\infty a_n\) be a series of real numbers. If \(\sum _{n=0}^\infty |a_n|\) converges, then we say \(\sum _{n=0}^\infty a_n\) converges absolutely.

[Definition: Uniform convergence] Let \(E\) be a set, let \((X,d)\) be a metric space, and let \(f_k\), \(f:E\to X\). We say that \(f_k\to f\) uniformly on \(E\) if for every \(\varepsilon >0\) there is an \(N\in \N \) such that if \(k\geq N\), then \(d(f_k(z),f(z))<\varepsilon \) for all \(z\in E\).

[Definition: Uniformly Cauchy] Let \(E\) be a set, let \((X,d)\) be a metric space, and let \(f_k:E\to X\). We say that \(\{f_k\}_{k=1}^\infty \) is uniformly Cauchy on \(E\) if for every \(\varepsilon >0\) there is an \(N\in \N \) such that if \(n>m\geq N\), then \(d(f_n(z),f_m(z))<\varepsilon \) for all \(z\in E\).

[Definition: Uniform convergence and Cauchy for series] If \(E\) is a set, \(V\) is a vector space, and \(f_k:E\to V\) for each \(k\in \N \), then the series \(\sum _{k=1}^\infty f_k\) converges uniformly to \(f:E\to V\) or is uniformly Cauchy, respectively, if the sequences of partial sums \(\bigl \{\sum _{k=1}^n f_k\bigr \}_{n=1}^\infty \) converge uniformly or are uniformly Cauchy.

Let \(a_n=x_n+iy_n\) where \(x_n\), \(y_n\in \R \). Then \(|x_n|\leq |a_n|\) and \(|y_n|\leq |a_n|\). We recall from real analysis that a nondecreasing sequence converges if and only if it is bounded. Therefore, because \(\sum _{n=0}^\infty |a_n|\) converges, the sequence of partial sums \(\{\sum _{n=0}^m |a_n|\}_{m\in \N }\) is bounded. Because \(|x_n|\leq |a_n|\), we have that \(\sum _{n=0}^m |x_n|\leq \sum _{n=0}^m|a_n|\leq \sup _{m\in \N } \sum _{n=0}^m |a_n|\) and so \(\{\sum _{n=0}^m |x_n|\}_{m\in \N }\) is bounded. Thus \(\sum _{n=0}^\infty x_n\) converges absolutely, and therefore \(\sum _{n=0}^\infty x_n\) converges. Similarly, \(\sum _{n=0}^\infty y_n\) converges. By Problem 220, \(\sum _{n=0}^\infty a_n\) converges.

Definition 3.2.2. (Complex power series.) A complex power series is a formal sum \(\sum _{k=0}^\infty a_k (z-P)^k\) for some \(\{a_k\}_{k=0}^\infty \subseteq \C \). The series converges at \(z\) if \(\lim _{n\to \infty } \sum _{k=0}^n a_k (z-P)^k\) exists.

Lemma 3.2.3. Suppose that the series \(\sum _{k=0}^\infty a_k (z-P)^k\) converges at \(z=w\) for some \(w\in \C \). Then the series converges absolutely at \(z\) for all \(z\) with \(|z-P|<|w-P|\).

Proposition 3.2.9. Suppose that the series \(\sum _{k=0}^\infty a_k (z-P)^k\) converges at \(z=w\) for some \(w\in \C \). If \(0<r<|w-P|\), then the series converges uniformly on \(\overline D(P,r)\).

Because \(\sum _{k=0}^\infty a_k(w-P)^k\) converges, we have that \(\lim _{k\to \infty } a_k(w-P)^k=0\). In particular, \(\{a_k(w-P)^k\}_{k=0}^\infty \) is bounded. Let \(A=\sup _{k\geq 0} |a_k(w-P)^k|\). Because \(0<r/|w-P|<1\), the geometric series \(\sum _{k=0}^\infty A(r/|w-P|)^k\) converges. Thus for every \(\varepsilon >0\) there is an \(N>0\) such that \(\sum _{k=N}^\infty A(r/|w-P|)^k<\varepsilon \).

If \(z\in \overline D(P,r)\), then \(|z-P|\leq r\) and so \(|a_k(z-P)^k| \leq |a_k(w-P)^k | (r/|w-P|)^k\leq A(r/|w-P|)^k\). We then have that \(\sum _{k=N}^\infty |a_k(z-P)^k|\leq \sum _{k=N}^\infty A(r/|w-P|)^k<\varepsilon \) for all \(z\in \overline D(P,r)\). Furthermore, by Lemma 3.2.3 and Problem 1600, \(\sum _{k=0}^\infty a_k(z-P)^k\) exists, and if \(m\geq N\) then

\begin{align*}\Bigl |\sum _{k=0}^\infty a_k(z-P)^k-\sum _{k=0}^m a_k(z-P)^k\Bigr | &=\Bigl |\sum _{k=m+1}^\infty a_k(z-P)^k\Bigr | \leq \sum _{k=m+1}^\infty |a_k(z-P)^k| <\varepsilon .\end{align*}Thus the series converges uniformly on \(\overline D(P,r)\).

Suppose for the sake of contradiction that the series converges at \(z\). By Lemma 3.2.3 with \(z\) and \(w\) interchanged, we know that the series converges at \(w\). But we assumed that the series diverged at \(w\), a contradiction. Therefore the series must diverge at \(z\).

Definition 3.2.4. (Radius of convergence.) The radius of convergence of \(\sum _{k=0}^\infty a_k (z-P)^k\) is \(\sup \{|w-P|:\sum _{k=0}^\infty a_k (w-P)^k\) converges\(\}\).

Let \(R_1=\sup \{|w-P|:\sum _{k=0}^\infty a_k (w-P)^k\) converges\(\}\), \(R_2=\inf \{|\zeta -P|:\sum _{k=0}^\infty a_k (\zeta -P)^k\) diverges\(\}\). If \(r\in \{|w-P|:\sum _{k=0}^\infty a_k (w-P)^k\) converges\(\}\), then \(r=|w-P|\) for some \(w\) such that the series converges. If the series diverges at \(\zeta \), then \(|\zeta -P|\geq |w-P|\) by Lemma 3.2.3, and so \(r\) is a lower bound on \(\{|\zeta -P|:\sum _{k=0}^\infty a_k (\zeta -P)^k\) diverges\(\}\). Thus \(r\leq R_2\). So \(R_2\) is an upper bound on \(\{|w-P|:\sum _{k=0}^\infty a_k (w-P)^k\) converges\(\}\), and so \(R_2\geq R_1\).

If \(R_2>R_1\), let \(z\in \C \) be such that \(R_1<|z-P|<R_2\). Then the series either converges or diverges at \(z\). If it converges, then \(|z-P|\in \{|w-P|:\sum _{k=0}^\infty a_k (w-P)^k\) converges\(\}\), and so \(|z-P|\leq R_1\), a contradiction. We similarly derive a contradiction if the series diverges at \(z\), and so we must have that \(R_2=R_1\), as desired.

What does the root test tell us about complex power series?

The root test for real numbers states that if \(\{b_n\}_{n=0}^\infty \subset \R \), then

If \(\limsup _{n\to \infty } \sqrt [n]{|b_n|} <1\), then \(\sum _{n=0}^\infty b_n\) converges absolutely.

If \(\limsup _{n\to \infty } \sqrt [n]{|b_n|}>1\), then the sequence \(\{b_n\}_{n=0}^\infty \) is unbounded (and in particular the series \(\sum _{n=0}^\infty b_n\) diverges).

Let \(\sum _{k=0}^\infty a_k(z-P)^k\) be a complex power series. Fix a \(z\in \C \) and observe that

\begin{equation*}\limsup _{k\to \infty }\sqrt [k]{|a_k(z-P)^k|} =|z-P|\limsup _{k\to \infty }\sqrt [k]{|a_k|}.\end{equation*}Thus the series \(\sum _{k=0}^\infty a_k(z-P)^k\) converges if \(|z-P|\limsup _{k\to \infty }\sqrt [k]{|a_k|}<1\) and diverges if \(|z-P|\limsup _{k\to \infty }\sqrt [k]{|a_k|}>1\). Thus the radius of convergence must be

\begin{equation*}\frac {1}{\limsup _{k\to \infty }\sqrt [k]{|a_k|}}\end{equation*}with the convention that \(\frac {1}{0}=\infty \) and \(\frac {1}{\infty }=0\); that is, if \(\limsup _{k\to \infty }\sqrt [k]{|a_k|}=0\) then the series converges everywhere and if \(\limsup _{k\to \infty }\sqrt [k]{|a_k|}=\infty \) then the series diverges unless \(z=P\).
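The root test formula can be explored numerically. The sketch below is a heuristic only: a true \(\limsup \) cannot be computed from finitely many terms, so we take the maximum of \(\sqrt [k]{|a_k|}\) over a finite tail. The coefficients (\(a_k=2^k\) for even \(k\), \(a_k=3^k\) for odd \(k\)), the tail range, and the function names are hypothetical choices for illustration. We work with \(\log |a_k|\) to avoid overflow.

```python
import math

def log_abs_coeff(k):
    """log|a_k| for the illustrative coefficients a_k = 2^k (k even), 3^k (k odd)."""
    return k * math.log(2.0) if k % 2 == 0 else k * math.log(3.0)

def root_test_radius(log_abs_a, k_min=200, k_max=400):
    """Heuristic estimate of 1 / limsup |a_k|^(1/k), using the max over a finite tail."""
    tail_sup = max(math.exp(log_abs_a(k) / k) for k in range(k_min, k_max + 1))
    return 1.0 / tail_sup

radius = root_test_radius(log_abs_coeff)
print(radius)  # close to 1/3
```

Here \(\sqrt [k]{|a_k|}\) alternates between \(2\) and \(3\), so the \(\limsup \) is \(3\) and the radius of convergence is \(1/3\). For these coefficients the quotients \(|a_k|/|a_{k+1}|\) have no limit, so the root test applies where a ratio-based argument does not.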

The ratio test for real numbers states that if \(\{b_n\}_{n=0}^\infty \subset \R \), then

If \(\lim _{n\to \infty } \frac {|b_{n+1}|}{|b_n|}\) exists and is less than \(1\), then \(\sum _{n=0}^\infty b_n\) converges absolutely.

If \(\lim _{n\to \infty } \frac {|b_{n+1}|}{|b_n|}\) exists and is greater than \(1\), then the sequence \(\{b_n\}_{n=0}^\infty \) is unbounded (and in particular the series \(\sum _{n=0}^\infty b_n\) diverges).

Let \(\sum _{k=0}^\infty a_k(z-P)^k\) be a complex power series. Fix a \(z\in \C \setminus \{P\}\) and observe that if either \(\lim _{k\to \infty } \frac {|a_{k+1}(z-P)^{k+1}|}{|a_k(z-P)^k|}\) or \(\lim _{k\to \infty }\frac {|a_{k+1}|}{|a_k|}\) exists, then the other must exist and

\begin{equation*}\lim _{k\to \infty } \frac {|a_{k+1}(z-P)^{k+1}|}{|a_k(z-P)^k|} = |z-P|\lim _{k\to \infty }\frac {|a_{k+1}|}{|a_k|}.\end{equation*}Thus, the series converges absolutely if \(|z-P|<\lim _{k\to \infty }\frac {|a_k|}{|a_{k+1}|}\) and diverges if \(|z-P|>\lim _{k\to \infty }\frac {|a_k|}{|a_{k+1}|}\), so the radius of convergence must be \(\lim _{k\to \infty }\frac {|a_k|}{|a_{k+1}|}\).
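As a small worked instance of the ratio formula, consider the hypothetical coefficients \(a_k=(k+1)/2^k\), for which \(|a_k|/|a_{k+1}|=2(k+1)/(k+2)\to 2\). The sketch below computes these quotients exactly with rational arithmetic; the coefficient choice and the sampled indices are assumptions made for illustration.

```python
from fractions import Fraction

def a(k):
    """Illustrative coefficients a_k = (k + 1) / 2^k, as exact rationals."""
    return Fraction(k + 1, 2**k)

indices = (10, 100, 1000)
ratios = [a(k) / a(k + 1) for k in indices]
for k, q in zip(indices, ratios):
    print(k, q, float(q))
```

The quotients increase toward \(2\), which suggests that the series \(\sum _{k=0}^\infty \frac {k+1}{2^k}z^k\) has radius of convergence \(2\).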

Lemma 3.2.10. Let \(\sum _{k=0}^\infty a_k (z-P)^k\) be a power series with radius of convergence \(R>0\). Define \(f:D(P,R)\to \C \) by \(f(z)=\sum _{k=0}^\infty a_k (z-P)^k\).

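Then \(f\) is \(C^\infty \) and holomorphic in \(D(P,R)\), and if \(n\in \N \) then the series\begin{equation*}\sum _{k=n}^\infty \frac {k!}{(k-n)!}\, a_k (z-P)^{k-n}\end{equation*}converges to \(f^{(n)}(z)\) for all \(z\in D(P,R)\).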

If \(m\in \N \), define \(f_m(z)=\sum _{k=0}^m a_k(z-P)^k\). Then each \(f_m\) is continuous. By Proposition 3.2.9, if \(0<r<R\) then \(f_m\to f\) uniformly on \(\overline D(P,r)\). By Memory 1560, we have that \(f\) must be continuous on \(\overline D(P,r)\). But if \(z\in D(P,R)\), then \(|z-P|<R\) and so there is an \(\varepsilon >0\) and an \(r<R\) such that \(D(z,\varepsilon )\subset \overline D(P,r)\), and so \(f\) is continuous at \(z\) for all \(z\in D(P,R)\). Thus \(f\) is continuous on \(D(P,R)\).

[Definition: Taylor series] Let \(P\in \Omega \subseteq \C \) where \(\Omega \) is open, and let \(f\) be holomorphic in \(\Omega \). By Theorem 3.1.1, \(f^{(n)}\) exists everywhere in \(\Omega \). The Taylor series for \(f\) at \(P\) is the power series \(\sum _{k=0}^\infty \frac {f^{(k)}(P)}{k!}(z-P)^k\).

Proposition 3.2.11. Suppose that the two power series \(\sum _{k=0}^\infty a_k (z-P)^k\) and \(\sum _{k=0}^\infty b_k (z-P)^k\) both have positive radius of convergence and that there is some \(r>0\) such that \(\sum _{k=0}^\infty a_k (z-P)^k=\sum _{k=0}^\infty b_k (z-P)^k\) (and both sums converge) whenever \(|z-P|<r\). Then \(a_k=b_k\) for all \(k\).

We will solve the previous two problems using a single calculation. Let \(f(z)=\sum _{k=0}^\infty a_k(z-P)^k\) in \(D(P,r)\). Then by Lemma 3.2.10, we must have that \(f\) is holomorphic in \(D(P,r)\), and if \(n\geq 0\) is an integer then\begin{equation*}\frac {f^{(n)}(P)}{n!} =\sum _{k=n}^\infty \frac {k!}{n!(k-n)!} a_k (P-P)^{k-n} .\end{equation*}Recall that in power series we define \(0^0=1\). If \(k>n\) then \((P-P)^{k-n}=0\), and so we have that\begin{equation*}\frac {f^{(n)}(P)}{n!} =\sum _{k=n}^n \frac {k!}{n!(k-n)!} a_k (P-P)^{k-n} =a_n .\end{equation*}Thus the Taylor series for \(f\) at \(P\) by definition is\begin{equation*}\sum _{n=0}^\infty \frac {f^{(n)}(P)}{n!} (z-P)^n = \sum _{n=0}^\infty a_n (z-P)^n.\end{equation*}This completes Problem 1700. Furthermore, if \(a_n\) and \(b_n\) are as in Proposition 3.2.11 and \(f(z)=\sum _{k=0}^\infty a_k(z-P)^k=\sum _{k=0}^\infty b_k(z-P)^k\) in \(D(P,r)\) for some \(r>0\), then \(a_n=\frac {f^{(n)}(P)}{n!}=b_n\) and so \(a_n=b_n\) for all \(n\).

[Definition: Analytic function] Let \(\Omega \subseteq \C \) be open and let \(f:\Omega \to \C \) be a function. If for every \(P\in \Omega \) there is an \(r>0\) with \(D(P,r)\subseteq \Omega \) and a sequence \(\{a_n\}_{n=0}^\infty \subset \C \) such that \(f(z)=\sum _{n=0}^\infty a_n (z-P)^n\) for all \(z\in D(P,r)\), we say that \(f\) is analytic.

(We will show in Section 3.3 that \(\mathop {\widetilde {\mathrm {exp}}} (z)=\exp (z)\), where \(\exp (z)\) is as defined in Section 1.2.)

Theorem 3.3.1. Let \(\Omega \subseteq \C \) be an open set and let \(f\) be holomorphic in \(\Omega \). Let \(D(P,r)\subseteq \Omega \) for some \(r>0\).

Then the Taylor series for \(f\) at \(P\) has radius of convergence at least \(r\) and converges to \(f(z)\) for all \(z\in D(P,r)\).
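To illustrate Theorem 3.3.1, the following sketch uses the hypothetical example \(f(z)=\frac {1}{1-z}\) on \(\Omega =\C \setminus \{1\}\) with \(P=i\). Here \(f^{(k)}(z)=\frac {k!}{(1-z)^{k+1}}\), so the Taylor coefficients at \(P\) are \(\frac {1}{(1-P)^{k+1}}\), and \(D(P,r)\subseteq \Omega \) for \(r=|1-i|=\sqrt 2\). The sample point \(z=-1+i\) and the number of terms are arbitrary choices.

```python
def f(z):
    """Illustrative holomorphic function on the plane minus the point 1."""
    return 1 / (1 - z)

def taylor_partial_sum(z, P, terms):
    """Partial sum of the Taylor series of 1/(1 - z) at P, with a_k = 1/(1 - P)^(k+1)."""
    total = 0j
    for k in range(terms):
        total += (z - P) ** k / (1 - P) ** (k + 1)
    return total

P = 1j
z = -1 + 1j  # |z - P| = 1 < sqrt(2), although |z| > 1
approximation = taylor_partial_sum(z, P, terms=200)
print(approximation, f(z), abs(approximation - f(z)))
```

The point \(z=-1+i\) lies outside the unit disc, where the series \(\sum _{k=0}^\infty z^k\) centered at \(0\) diverges, but inside \(D(i,\sqrt 2)\), so the Taylor series centered at \(i\) still converges to \(f(z)\) there.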